2023-06-30

It's time to say goodbye!

After 45 months, RISE officially ended in May 2023. As the project concludes its remarkable journey, we want to express our gratitude to all project members and stakeholders. Over the past four years, we have achieved and gone beyond the overall goals of RISE together in advancing earthquake risk reduction capabilities for a resilient Europe. Our diverse team of experts from various fields worked collaboratively, embracing an interdisciplinary approach. To all team members, partner organisations, and stakeholders, thank you for your invaluable contributions and support. We are immensely proud of what we have accomplished together.

Although the chapter of RISE has ended, the legacy of our products and scientific advancements lives on and will continue to thrive. Therefore, the RISE website will continue providing access to all its contents, including reports, deliverables, news articles, and more. However, the website will not be updated anymore. For a comprehensive overview of the project's highlights, milestones, and additional information, we encourage you to explore our final RISE newsletter.

Let us carry the spirit of collaboration, innovation, and resilience into our future endeavours. The knowledge and experiences gained within RISE will continue to shape our paths and contribute to the greater good.

2023-05-27

RISE Final Meeting

On May 25 and 26, the RISE community gathered for the last time at the RISE Final Meeting, held in Lugano, a Swiss city on Lake Lugano. Over two days, all work packages presented their highlights and the lessons learned over the last four years, discussed the challenges they faced, and reflected on the future directions they will pursue after RISE.

Besides the talks about the project itself, there were many opportunities to hear about exciting science beyond RISE. Nicolas van der Elst (USGS) and Daniela Di Bucci (Italian Civil Protection) attended the meeting as guest speakers and provided insights into the practical implications of Operational Earthquake Forecasts (OEF) from the perspectives of the USGS and the Civil Protection, respectively. Further, Domenico Giardini (ETHZ), Ramon Zuniga (UNAM, RISE SAB), Iunio Iervolino (UNINA), Florian Haslinger (ETHZ & EPOS), and Fabrice Cotton (GFZ & EFEHR) gave presentations on topics related to and beyond RISE.

The Final RISE Meeting officially ended with feedback from Egill Hauksson (Caltech) and Ramon Zuniga (UNAM) from the Scientific Advisory Board (SAB), and closing remarks from Stefan Wiemer, the RISE project coordinator.

To bring the project to a successful conclusion, there are now some administrative tasks to be completed. Even though RISE is ending, its legacy will continue to grow in other projects and initiatives in the future.

Interested in reading more about RISE's research? Then check out the list of publications!

2023-03-16

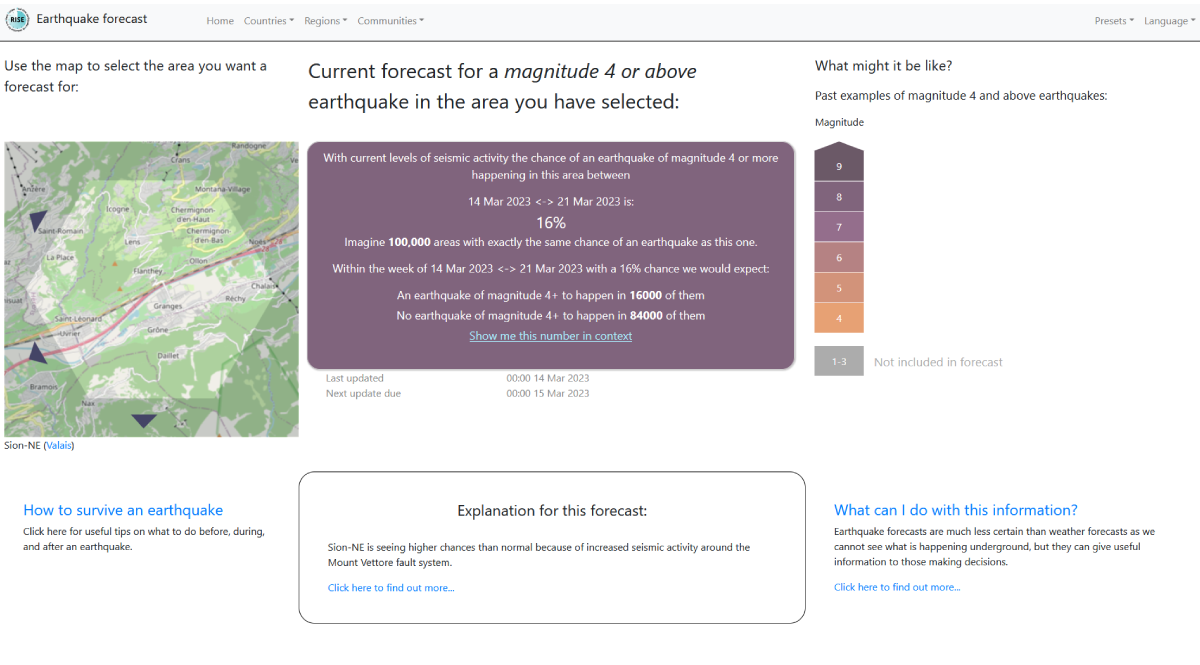

Earthquake forecast communication: 13 good practice guidelines

Perhaps the most important, but also the most difficult, question that seismologists are asked is: "What is the chance of a damaging earthquake happening in this area, and if it does happen, what are its likely effects?" This question is the basis for operational earthquake forecasting (OEF) and operational earthquake loss forecasting (OELF). The answers will always carry considerable uncertainty because earthquakes cannot be predicted. However, we can sometimes tell that they are more likely in certain areas due to movements that have been detected in the Earth's crust. Such forecasts require careful, precise, honest, and unambiguous communication, sometimes to people with little or no grounding in the geological principles underlying the information. They are not warnings, and they do not give people a 'message' telling them to do or not do something; they are more complex to communicate than that. How can this challenge best be met? Thirteen good practice guidelines can help you communicate OEF.

Read more about earthquake forecast communication in this report!

2023-02-15

Accuracy and precision of earthquake forecasts using the new generation catalogues

Enhanced seismic catalogues offer a unique opportunity to test whether we can increase aftershock predictability by feeding them into well-established short-term earthquake forecast models. In their recent paper, RISE modellers Simone Mancini, Margarita Segou, Max Werner, and co-authors performed a retrospective experiment to forecast the M3+ seismicity of the 2016-2017 Central Italy sequence. They took advantage of an exceptional suite of enhanced catalogues developed in the context of the NERC-NSFGEO funded project “The Central Apennines Earthquake Cascade Under a New Microscope”, which also supported the present research. The datasets employed featured automated detections, re-evaluated magnitudes, and improved hypocentral resolution through double-difference relocations (CAT4), as well as a machine-learning-derived catalogue of ~900,000 earthquakes (CAT5).

The group developed a set of standard Epidemic-Type Aftershock Sequence (ETAS) and state-of-the-art Coulomb Rate-and-State (CRS) models using catalogues with a bulk completeness magnitude at least two units lower than that of the real-time catalogue. This made it possible to inform both types of forecasts with seismic sources down to a minimum triggering magnitude of M1. Model performance was tracked by means of standard CSEP likelihood-based metrics, such as the T-test, and benchmarked against that of the same models when they learn from real-time data only.
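For readers unfamiliar with ETAS, the sketch below shows the general shape of a space-time ETAS conditional intensity and how a minimum triggering magnitude decides which past events contribute. It is a minimal illustration under stated assumptions: a spatially uniform background rate, a normalised Omori-Utsu temporal kernel, and an isotropic power-law spatial kernel. The function name and all parameter names are hypothetical, and this is not the model configuration used by Mancini et al.

```python
import math


def etas_intensity(t, x, y, history, mu, K, alpha, c, p, d, q, m0):
    """Simplified space-time ETAS conditional intensity at time t and location (x, y).

    Hypothetical illustration, not the configuration used in the paper.
    history: iterable of (t_i, x_i, y_i, m_i) for past events (km, days).
    mu: background rate density, assumed spatially uniform here.
    m0: minimum triggering magnitude (also used as reference magnitude here).
    Requires p > 1 and q > 1 so that both kernels integrate to one.
    """
    rate = mu
    for t_i, x_i, y_i, m_i in history:
        if t_i >= t or m_i < m0:
            continue  # only earlier events above the triggering threshold contribute
        productivity = K * 10 ** (alpha * (m_i - m0))  # larger events trigger more
        # Normalised Omori-Utsu decay in time
        temporal = (p - 1) * c ** (p - 1) / (t - t_i + c) ** p
        # Normalised isotropic power-law decay in space
        r2 = (x - x_i) ** 2 + (y - y_i) ** 2
        spatial = (q - 1) / math.pi * d ** (2 * (q - 1)) / (r2 + d ** 2) ** q
        rate += productivity * temporal * spatial
    return rate
```

Lowering `m0`, as the enhanced catalogues allow, adds the triggering contributions of many more small events to the sum, which is the "secondary triggering" effect discussed below.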

Despite the impressive amount of additional information provided by the enhanced catalogues, this new set of models did not show statistically significant improvements over their near-real-time counterparts in terms of average daily information gain (Figure 1). Nonetheless, incorporating the triggering contributions of small-magnitude detections from the enhanced catalogues (usually referred to as ‘secondary triggering’ effects) was clearly beneficial for both types of forecasts, at least down to the fault lengths typical of M~2 source events.
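Comparisons in terms of information gain can be illustrated with the per-earthquake information gain and t-statistic in the form used by the CSEP T-test. The sketch assumes each model supplies its forecast rate in the bin of every observed earthquake plus its total expected number of events. Note that the paper reports average daily gains, whereas this sketch computes a gain per earthquake; the function name is hypothetical.

```python
import math
import statistics


def information_gain_per_eq(rates_a, rates_b, n_expected_a, n_expected_b):
    """Information gain per earthquake of model A over model B, and its t-statistic.

    rates_a, rates_b: each model's forecast rate in the bin of every observed
        earthquake (same order, all > 0).
    n_expected_a, n_expected_b: total number of events each model expects.
    Requires at least two earthquakes whose log-rate differences are not all identical.
    """
    n = len(rates_a)
    # Per-event log-rate differences between the two models
    diffs = [math.log(a) - math.log(b) for a, b in zip(rates_a, rates_b)]
    # Mean log-ratio, penalised by the difference in total expected events
    gain = statistics.mean(diffs) - (n_expected_a - n_expected_b) / n
    t_stat = gain / (statistics.stdev(diffs) / math.sqrt(n))
    return gain, t_stat
```

A positive gain whose t-statistic exceeds the critical value of Student's t distribution (roughly 2 for a two-sided 5% test with many events) would indicate that A is significantly more informative than B.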

Through targeted sensitivity tests, the researchers clarified the reasons behind these results. First, the typical spatial discretizations of forecast experiments (≥ 2 km) hamper the ability of models to capture the highly localized (i.e., sub-kilometric) secondary triggering patterns revealed by the new-generation catalogues. Second, seismic catalogues produced by different workflows show remarkable differences, such as magnitude inconsistencies or different location uncertainties, even at moderate magnitudes; such differences might only be accounted for in models through ad hoc parameter calibrations. Third, the current likelihood-based validation metrics proved to be extremely sensitive to the choice of input (learning) and target (to be forecast) seismicity, and to the extent and resolution of the grid used to evaluate the models.

The results of these experiments are a first important step towards understanding how to improve the design of future earthquake forecast protocols, especially once these exploit next-generation seismic catalogues in operational mode, and towards identifying the most appropriate methods to evaluate their skill.

On the use of high-resolution and deep-learning seismic catalogs for short-term earthquake forecasts: Potential benefits and current limitations

Mancini, S., Segou, M., Werner, M. J., Parsons, T., Beroza, G., & Chiaraluce, L. (2022). Journal of Geophysical Research: Solid Earth. https://doi.org/10.1029/2022JB025202

A comprehensive suite of earthquake catalogues for the 2016-2017 Central Italy seismic sequence

Scientific Data. https://doi.org/10.1038/s41597-022-01827-z

2022-11-02

Co-producing knowledge – the RISE Early Career Scientist workshop

From October 26 to 28, the early career scientists (ECS) and senior scientists of (not only) RISE explored four cross-disciplinary topics: open science, ethical implications, dynamic risk, and transdisciplinarity. In line with the objectives of RISE, the workshop focused on “Bringing research to practical applications that increase society’s earthquake resilience”.

The ECS learned that the four topics are strongly interconnected and affect one another. For example, dynamic risk services/products like operational earthquake forecasting (OEF) or rapid impact assessments (RIA) are only possible if the underlying data are openly available and continuously updated. In this regard, standardization is key to ensuring that the same data can be used for various (dynamic risk) services/products simultaneously (e.g., rapid earthquake information for OEF and RIA), making them more sustainable.

It became very evident that transdisciplinary research is needed to facilitate collaboration between the disciplines involved in developing the services/products and to tailor those to the end-users’ needs. However, the wide spectrum of stakeholders within the scientific community and in society makes transdisciplinary research challenging. Moreover, different situations, e.g., during the evolution of a seismic crisis, require different collaboration formats between scientists from various disciplines and different tools to involve societal stakeholders. Despite these difficulties, transdisciplinary efforts are the only way to create effective products/services for our end-users and, consequently, increase society’s resilience.

The ethics group of the workshop developed a decision tree to deduce possible actions for various problems with ethical implications. If there is consensus on what is ethically correct, the solutions are explicit, such as enforcing open science to avoid biases in publishing and handling data/results. Ethically subjective actions, however, first require reaching a consensus, for instance by letting experts vote or weigh arguments. Yet finding a consensus is not trivial: is 70% agreement on releasing OEF information to the public enough to actually do it? The workshop also allowed us to establish and strengthen connections, especially among ECSs. It ended with a statement by one senior scientist: “You [ECS] are the next generation of scientists and, thus, can change the future.”

2022-10-13

Earthquake early warning to handle dynamic risk

Early warning systems can support rapid protective action to minimize harm to people, assets, and livelihoods. In the RISE project, earthquake early warning (EEW) counts as one of the most relevant concepts for handling dynamic risk, such as that posed by earthquakes. To mark the International Day for Disaster Risk Reduction (IDDRR), whose focus this year is "Early warning and early action for all", we are spotlighting one of RISE's research efforts dealing with EEW systems in more detail.

EEW systems aim to rapidly detect earthquakes and provide alerts to affected areas so that people can take protective actions on the spot (e.g., “Drop-cover-hold on”) before the onset of strong ground shaking. To this end, it is crucial that the general public is trained to respond quickly and appropriately. Further, EEW systems can be used to protect vulnerable infrastructure through automated shutdown procedures. EEW is not a prediction, because the earthquake has already begun when an alert is issued. In most cases, EEW can only provide up to tens of seconds of warning time, depending on how distant the earthquake is, how quickly the seismic sensors measure it, and how rapidly people are alerted and respond. Based on a large synthetic earthquake catalogue that samples the expected earthquake rate in space and time, Böse et al. (2022) developed a framework to determine statistics of fatalities, injuries, and warning times. This framework allows a better assessment of the performance of a planned EEW system. For example, warning times can be extended by densifying the station network; however, seismic equipment is expensive. Therefore, the work of Böse et al. (2022) includes an approach to determine optimal locations for a minimum number of new sensors to provide the best EEW performance for damaging earthquakes. In a second paper, Papadopoulos et al. (under review) extended this framework to evaluate the effectiveness of EEW in reducing casualty risk. This work considers, among other factors, delays in human response to warnings. The proposed framework can be applied to any country considering building an EEW system and will help it better evaluate the expected benefits.
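The dependence of warning time on distance can be made concrete with a simple kinematic estimate: the warning time at a site is roughly the S-wave travel time to the site minus the time needed to detect the P wave at the nearest station, process it, and deliver the alert. The function name, wave velocities, source depth, and delays below are illustrative assumptions, and this is not the loss-based framework of Böse et al. (2022).

```python
import math


def warning_time(epi_dist_site_km, epi_dist_station_km, depth_km=10.0,
                 vp=6.0, vs=3.5, processing_s=3.0, delivery_s=1.0):
    """Rough EEW warning time in seconds (hypothetical kinematic model).

    Assumes straight-ray travel at constant P and S velocities (km/s), detection
    at a single nearest station, and fixed processing and delivery delays.
    """
    hypo_site = math.hypot(epi_dist_site_km, depth_km)        # source-to-site distance
    hypo_station = math.hypot(epi_dist_station_km, depth_km)  # source-to-station distance
    s_arrival_at_site = hypo_site / vs
    alert_time = hypo_station / vp + processing_s + delivery_s
    return s_arrival_at_site - alert_time
```

Negative values mark the so-called blind zone near the epicentre, where strong shaking arrives before any alert; moving the nearest station closer to the source, as network densification does, increases the warning time.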

Additionally, RISE is evaluating the potential of crowdsourced EEW using smartphone apps and low-cost sensors in collaboration with the Earthquake Network initiative, and is working to improve EEW-related communication and alert messages. The RISE project thus covers a wide range of research on EEW systems. Here are some interesting RISE publications and presentations worth reading:

- Bossu, R., Finazzi, F., Steed, R., Fallou, L., Bondár, I. (2022). 'Shaking in 5 seconds!' Performance and user appreciation assessment of the earthquake network smartphone‐based public earthquake early warning system. Seismological Research Letters; https://doi.org/10.1785/0220210180

- Böse, M., A. N. Papadopoulos, L. Danciu, J. F. Clinton, and S. Wiemer (2022). Loss-based Performance Assessment and Seismic Network Optimization for Earthquake Early Warning, Bull. Seismol. Soc. Am. 112 (3): 1662–1677, https://doi.org/10.1785/0120210298.

- Dallo, I., Marti, M., Clinton, J., Böse, M., Massin, F., & Zaugg, S. (2022). Earthquake early warning in countries where damaging earthquakes only occur every 50 to 150 years – the societal perspective. Under Review in International Journal of Disaster Risk Reduction ➝ related presentation

- Fallou, L., Finazzi, F., Bossu, R. (2021). Efficacy and Usefulness of an Independent Public Earthquake Early Warning System: A Case Study – The Earthquake Network Initiative in Peru. Seismological Research Letters; doi: 10.1785/0220210233

- Finazzi, F., Bondár, I., Bossu, R., Steed, R. (2022). A Probabilistic Framework for Modeling the Detection Capability of Smartphone Networks in Earthquake Early Warning. Seismological Research Letters 2022; doi: https://doi.org/10.1785/0220220213

- Iaccarino, A.G., Gueguen, P., Picozzi, M., and Ghimire, S. (2021). Earthquake Early Warning System for Structural Drift Prediction Using Machine Learning and Linear Regressors. Front. Earth Sci. 9:666444. doi: https://doi.org/10.3389/feart.2021.666444

- Finazzi, F. (2020). The Earthquake Network Project: A Platform for Earthquake Early Warning, Rapid Impact Assessment, and Search and Rescue. Front. Earth Sci., 8, 243, doi: 10.3389/feart.2020.00243

- Papadopoulos A. N., M. Böse, L. Danciu, J. F. Clinton, and S. Wiemer. A Framework to Quantify the Effectiveness of Earthquake Early Warning in Mitigating Seismic Risk, Earthquake Spectra (Under review)

2022-08-03

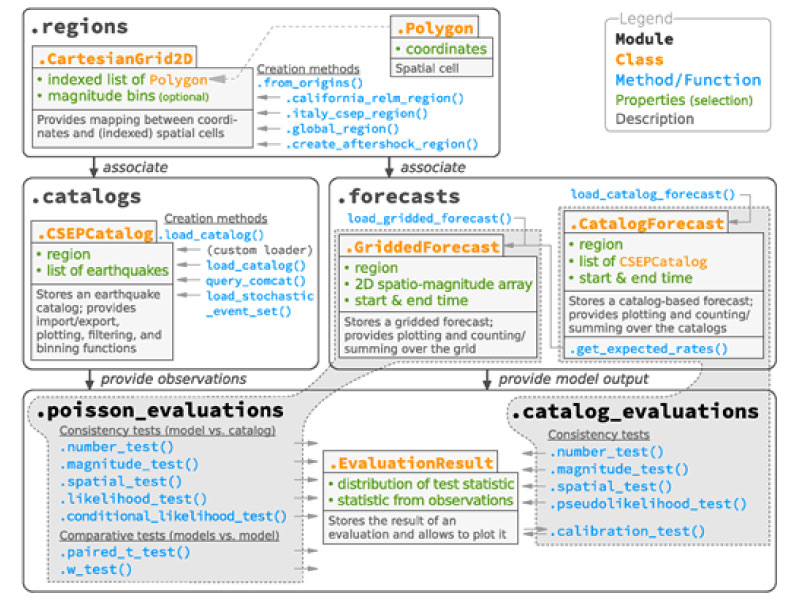

The Python pyCSEP toolkit for earthquake forecast developers

The RISE WP7 testing group and collaborators, led by Bill Savran from the Southern California Earthquake Center (SCEC), published a research article describing the Python package pyCSEP: a toolkit for earthquake forecast developers. pyCSEP provides open‐source and community-based implementations of useful tools for evaluating probabilistic and simulation-based earthquake forecasts. It also includes earthquake catalogue access and filtering, evaluation methods and visualisation routines.

To showcase how pyCSEP can be used to evaluate earthquake forecasts, the RISE WP7 group has provided a reproducibility package containing all the components required to re‐create the figures published in their paper about the pyCSEP toolkit. In this way, the reproducibility package implements the open science strategy of the Collaboratory for the Study of Earthquake Predictability (CSEP) and provides readers with a worked tutorial of the software.

The Collaboratory for the Study of Earthquake Predictability (CSEP) is an open and global community whose mission is to accelerate earthquake predictability research through rigorous testing of probabilistic earthquake forecast models and prediction algorithms. pyCSEP supports this mission by providing open‐source implementations of useful tools for evaluating earthquake forecasts.
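To give a flavour of the kind of evaluation pyCSEP automates, the sketch below implements the Poisson number (N) test, which compares the total number of observed earthquakes with the number a forecast expects. pyCSEP ships its own implementation of this test; the standalone functions here are hypothetical illustrations and do not use the pyCSEP API.

```python
import math


def poisson_cdf(k, lam):
    """P(N <= k) for N ~ Poisson(lam), summed term by term (fine for moderate lam)."""
    if k < 0:
        return 0.0
    term = math.exp(-lam)  # P(N = 0)
    total = term
    for i in range(1, k + 1):
        term *= lam / i    # P(N = i) obtained from P(N = i - 1)
        total += term
    return min(total, 1.0)


def number_test(n_forecast, n_observed):
    """Poisson N-test quantiles (delta1, delta2) for an expected count n_forecast.

    delta1 = P(N >= n_observed): small values suggest too few events were forecast.
    delta2 = P(N <= n_observed): small values suggest too many events were forecast.
    """
    delta1 = 1.0 - poisson_cdf(n_observed - 1, n_forecast)
    delta2 = poisson_cdf(n_observed, n_forecast)
    return delta1, delta2
```

With a one-sided significance level of, say, 0.025 on each quantile, a forecast would be flagged as inconsistent with the observed number of events if either value falls below it.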

Research article: pyCSEP: A Python Toolkit for Earthquake Forecast Developers

William H. Savran, José A. Bayona, Pablo Iturrieta, Khawaja M. Asim, Han Bao, Kirsty Bayliss, Marcus Herrmann, Danijel Schorlemmer, Philip J. Maechling, Maximilian J. Werner; pyCSEP: A Python Toolkit for Earthquake Forecast Developers. Seismological Research Letters 2022; doi: https://doi.org/10.1785/0220220033

Reproducibility Package for pyCSEP:

Savran, William H., Bayona, José A., Iturrieta, Pablo, Asim, Khawaja M., Bao, Han, Bayliss, Kirsty, Herrmann, Marcus, Schorlemmer, Danijel, Maechling, Philip J., & Werner, Maximilian J. (2022). Reproducibility Package for pyCSEP: A Toolkit for Earthquake Forecast Developers (v1.0.0). Zenodo. https://doi.org/10.5281/zenodo.6626265

2022-07-26

How to fight earthquake misinformation?

Misinformation has always existed, in the form of rumours, conspiracies, or malicious gossip, in all countries around the world. Previous events have shown that news agencies can support governmental institutions in fighting misinformation (Kwanda & Lin, 2020); that an authoritative voice can reduce anxiety in Twitter communities (Oh et al., 2010); and that public debates have to be better monitored by responsible authorities so that they can react immediately when misinformation is spread (Arora, 2021; Lacassin et al., 2019). However, new communication channels have amplified misinformation to a new level, allowing more people to share such information very easily and rapidly with an enormous audience. What can be done to fight earthquake misinformation, and specifically to address the most common earthquake myths?

Building on anecdotal evidence from previous earthquake crises, RISE members Laure Fallou, Michèle Marti, Irina Dallo, and Marina Corradini looked for prevalent misbeliefs in earthquake seismology by conducting a literature review and interviews with selected experts. This allowed them to scope the field and identify the most common misbeliefs these experts dealt with in their interactions with non-experts. The authors then assessed the scientific consensus on the accuracy of these misbeliefs through a global online survey of 167 scientists. The results show that earthquake misinformation can often be traced back to a set of common myths, which fall into three categories: creating earthquakes, predicting earthquakes, and earthquakes related to climate or weather phenomena. The four researchers used these insights and their expertise to compile a communication guide that can help institutions, practitioners, and other actors communicating earthquake information to prevent and fight misinformation.

Although the guide focuses on earthquakes, the described design processes and main recommendations are readily applicable to other hazard domains. In any case, preventing and fighting misinformation requires a good understanding of the needs and concerns of different target audiences, a communication strategy, trained professionals, and proactive communication before, during, and after crises. Only then can we best support society in managing crises.

→ Find out more in the communication guide, which is available in English and Spanish.

→ How can false information about earthquakes be countered? The communication guide is also available in Spanish.

How to Fight Earthquake Misinformation: A Communication Guide.

Fallou, L., Marti, M., Dallo, I., Corradini, M.

Seismological Research Letters 2022; doi: https://doi.org/10.1785/0220220086

2022-06-30

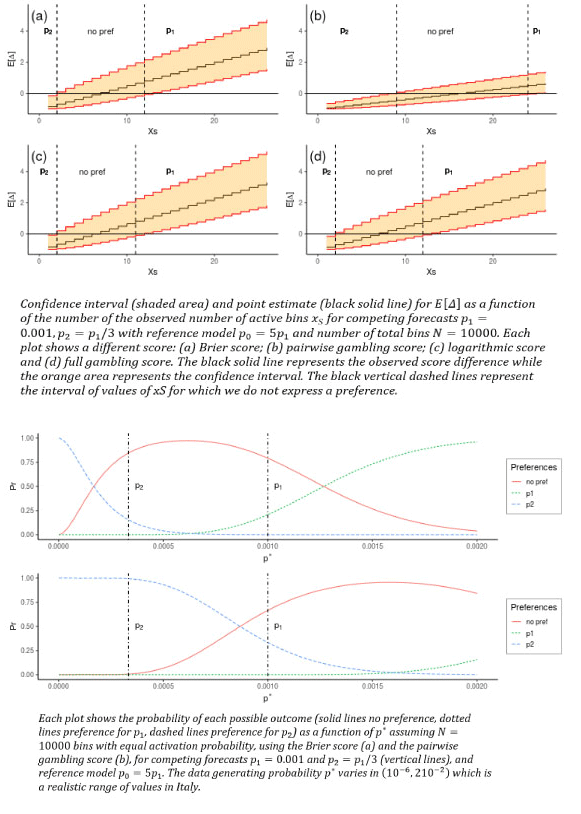

Ranking earthquake forecasts using proper scoring rules: binary events in a low probability environment

A research group from the University of Edinburgh and the University of Bristol (Serafini et al., 2022) has recently published a new paper dealing with ranking earthquake forecasts by using proper scoring rules. Operational earthquake forecasting for risk management and communication during seismic sequences depends on our ability to select an optimal forecasting model. To do this, the researchers need to compare the performance of competing models in prospective experiments, and to rank their performance according to the outcome using a fair, reproducible and reliable method. It is crucial that the metrics used to rank the competing forecasts are ‘proper’, meaning that, on average, they prefer the data generating model.

→ The authors prove that the Parimutuel Gambling score, proposed, and in some cases applied, as a metric for comparing probabilistic seismicity forecasts, is in general ‘improper’. In the special case where it is proper, they show it can still be used improperly.

→ Furthermore, they demonstrate these conclusions both analytically and graphically, providing a set of simulation-based techniques that can be used to assess whether a score is proper.

→ The researchers compare the Parimutuel Gambling score’s performance with two commonly used proper scores (the Brier and logarithmic scores) using confidence intervals to account for the uncertainty around the observed score difference.

→ They also suggest that using confidence intervals enables a rigorous approach to distinguish between the predictive skills of candidate forecasts, in addition to their rankings.

In general, this work provides a framework to check if (and when) a score is proper, and to explore and compare the capabilities of different scores in distinguishing between competing forecasts with a focus on the penalty applied by different scores.
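What "proper" means can be checked numerically for the two proper scores mentioned above: if events truly occur with probability p, the expected score should be optimised by forecasting exactly p. The sketch below performs this check by grid search in a low-probability setting; it is an analytic-expectation check rather than the authors' simulation-based technique, the Parimutuel Gambling score is not implemented, and the function names are hypothetical.

```python
import math


def brier_score(q, x):
    """Brier score for forecast probability q and binary outcome x (lower is better)."""
    return (q - x) ** 2


def log_score(q, x):
    """Logarithmic score for forecast probability q and binary outcome x (higher is better)."""
    return math.log(q) if x == 1 else math.log(1.0 - q)


def expected_score(score, q, p):
    """Expected score of forecast q when the event truly occurs with probability p."""
    return p * score(q, 1) + (1.0 - p) * score(q, 0)


def best_forecast(score, p, minimise, grid_size=9999):
    """Grid search for the forecast probability that optimises the expected score.

    For a proper score, the optimum should sit at (or next to) the true probability p.
    """
    grid = [(i + 1) / (grid_size + 1) for i in range(grid_size)]  # 0.0001 ... 0.9999

    def key(q):
        return expected_score(score, q, p)

    return min(grid, key=key) if minimise else max(grid, key=key)
```

For example, `best_forecast(brier_score, 0.001, minimise=True)` and `best_forecast(log_score, 0.001, minimise=False)` both recover the true probability of 0.001 to within the grid resolution; an improper score would reward forecasts that deviate from it.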

Ranking earthquake forecasts using proper scoring rules: binary events in a low probability environment

Serafini, F., Naylor, M., Lindgren, F., Werner, M.J., Main, I.

Geophysical Journal International, Volume 230, Issue 2, September 2022, Pages 1419–1440, https://doi.org/10.1093/gji/ggac124

2022-05-18

RISE Annual Meeting in Florence

The RISE annual meeting took place from 11 to 13 May in Florence, Italy. Since the RISE community had last met in person at the kick-off meeting in 2019, this conference took on special significance. For many, it was a great reunion after more than two years; for others, it was the first chance to meet in person. It was an intense 2.5 days with many presentations from the different work packages, discussion rounds, interactive sessions, and a poster fair. In addition to the official programme, the joint coffee breaks, lunches, and dinners provided great opportunities for lively exchange. A magnitude 3.7 earthquake, which we all felt during the conference dinner, made for an exciting real-time experience. A meeting that we will not forget any time soon!

2022-04-28

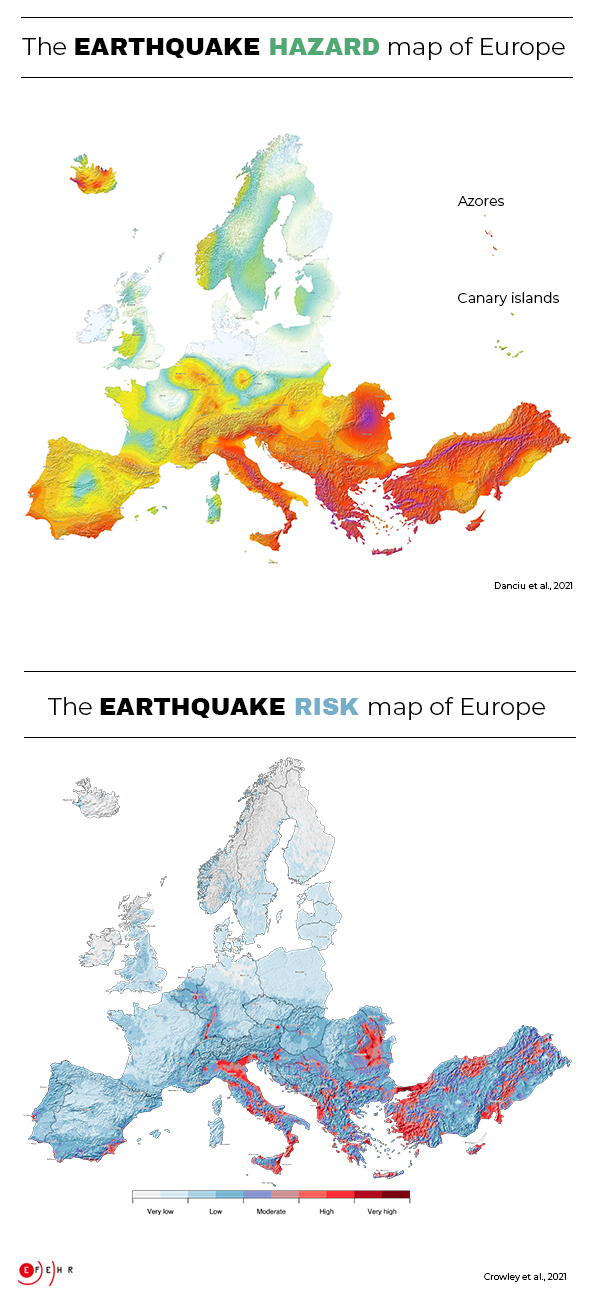

New earthquake assessments available to strengthen preparedness in Europe

During the 20th century, earthquakes in Europe accounted for more than 200,000 deaths and over 250 billion Euros in losses (EM-DAT). Comprehensive earthquake hazard and risk assessments are crucial to reducing the effects of catastrophic earthquakes. The newly released update of the earthquake hazard model and the first earthquake risk model for Europe are the basis for establishing mitigation measures and making communities more resilient. They significantly improve the understanding of where strong shaking is most likely to occur and what effects future earthquakes in Europe will have. The development of these models was a joint effort of seismologists, geologists, and engineers across Europe. The research has been funded by the European Union’s Horizon 2020 research and innovation programme within the projects RISE, SERA and EPOS-IP.

Earthquakes can neither be prevented nor precisely predicted, but efficient mitigation measures informed by earthquake hazard and risk models can significantly reduce their impacts. The 2020 European Seismic Hazard and Risk Models offer comparable information on the spatial distribution of expected levels of ground shaking due to earthquakes, their frequency, and their potential impact on the built environment and on people’s wellbeing. To this end, all underlying datasets have been updated and harmonised, a complex undertaking given the vast amount of data and the highly diverse tectonic settings in Europe. Such an approach is crucial to establishing effective transnational disaster mitigation strategies that support the definition of insurance policies or up-to-date building codes at the European level (e.g., Eurocode 8) and at national levels. In Europe, Eurocode 8 defines the standards recommended for earthquake-resistant construction and for retrofitting buildings and structures to limit the impact of earthquakes. Open access is provided to both the European Seismic Hazard Model and the European Seismic Risk Model, including various underlying components such as input datasets.

The updated earthquake hazard model benefits from advanced datasets

Earthquake hazard describes potential ground shaking due to future earthquakes and is based on knowledge about past earthquakes, geology, tectonics, and local site conditions at any given location across Europe. The 2020 European Seismic Hazard Model (ESHM20) replaces the previous model of 2013.
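Hazard estimates are usually expressed as a probability of exceedance over an exposure time. Under the Poisson (time-independent) assumption of classical PSHA, the probability P of at least one exceedance in t years and the return period T are related by P = 1 - exp(-t/T). The sketch below converts between the two; the "10% in 50 years" example is a convention widely used in building codes, chosen here for illustration rather than taken from the text above, and the function names are hypothetical.

```python
import math


def return_period(prob_exceedance, exposure_years):
    """Return period (years) implied by a Poisson probability of exceedance."""
    return -exposure_years / math.log(1.0 - prob_exceedance)


def prob_exceedance(return_period_years, exposure_years):
    """Poisson probability of at least one exceedance within the exposure time."""
    return 1.0 - math.exp(-exposure_years / return_period_years)
```

For example, `return_period(0.10, 50.0)` gives about 475 years, the familiar return period behind the "10% in 50 years" ground-motion level.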

The advanced datasets incorporated into the new version of the model have led to a more comprehensive assessment. As a consequence, ground shaking estimates have been adjusted, resulting in lower estimates in most parts of Europe, with the exception of some regions in western Turkey, Greece, Albania, Romania, southern Spain, and southern Portugal, where higher ground shaking estimates are observed. The updated model also confirms that Turkey, Greece, Albania, Italy, and Romania are the countries with the highest earthquake hazard in Europe, followed by the other Balkan countries. But even in regions with low or moderate ground shaking estimates, damaging earthquakes can occur at any time.

Furthermore, specific hazard maps from Europe’s updated earthquake hazard model will serve for the first time as an informative annex for the second generation of the Eurocode 8. Eurocode 8 standards are an important reference to which national models may refer. Such models, when available, provide authoritative information to inform national, regional, and local decisions related to developing seismic design codes and risk mitigation strategies. Integrating earthquake hazard models in specific seismic design codes helps ensure that buildings respond appropriately to earthquakes. These efforts thus contribute to better protect European citizens from earthquakes.

Main drivers of the earthquake risk are older buildings, high earthquake hazard, and urban areas

Earthquake risk describes the estimated economic and humanitarian consequences of potential earthquakes. In order to determine the earthquake risk, information on local soil conditions, the density of buildings and people (exposure), the vulnerability of the built environment, and robust earthquake hazard assessments are needed. According to the 2020 European Seismic Risk Model (ESRM20), buildings constructed before the 1980s, urban areas, and high earthquake hazard estimates mainly drive the earthquake risk.

Although most European countries have recent design codes and standards that ensure adequate protection from earthquakes, many older unreinforced or insufficiently reinforced buildings still exist, posing a high risk for their inhabitants. The highest earthquake risk accumulates in urban areas, such as the cities of Istanbul and Izmir in Turkey, Catania and Naples in Italy, Bucharest in Romania, and Athens in Greece, many of which have a history of damaging earthquakes. In fact, these four countries alone account for almost 80% of the modelled average annual economic loss of 7 billion Euros due to earthquakes in Europe. However, cities such as Zagreb (Croatia), Tirana (Albania), Sofia (Bulgaria), Lisbon (Portugal), Brussels (Belgium), and Basel (Switzerland) also have an above-average level of earthquake risk compared to less exposed cities, such as Berlin (Germany), London (UK), or Paris (France).
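An average annual loss is an expected value over all possible earthquakes. In a generic event-based formulation, it is the sum over events of each event's annual rate of occurrence multiplied by its loss. The event set below is entirely hypothetical, the function names are illustrative, and this sketch does not reproduce how ESRM20 was computed.

```python
def average_annual_loss(event_set):
    """Expected loss per year: sum of annual rate x loss over the event set."""
    return sum(rate * loss for rate, loss in event_set)


def loss_exceedance_rate(event_set, threshold):
    """Annual rate of events whose loss is at least `threshold`."""
    return sum(rate for rate, loss in event_set if loss >= threshold)


# Hypothetical event set: (annual rate of occurrence, loss in Euros)
EVENTS = [
    (0.1, 1e7),     # frequent, small loss
    (0.01, 1e9),    # rare, large loss
    (0.001, 2e10),  # very rare, very large loss
]
```

Here the rare events dominate: `average_annual_loss(EVENTS)` is 31 million Euros per year, of which 30 million come from the two rarest events, which illustrates why a few high-hazard, high-exposure areas can account for most of the modelled loss.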

Data access

The hazard.EFEHR and risk.EFEHR web platforms provide access to interactive tools such as seismic hazard and risk models, products and information. Distributed data, models, products and information are based on research projects carried out by academic and public organisations.

The scientific data is accessible on the publicly available EFEHR GitLab repository for free download.

Except where otherwise noted, all ESHM20 and ESRM20 data and scientific products are released under the Creative Commons BY 4.0 license. These products can therefore be used for private, scientific, commercial, and non-commercial purposes, provided adequate citation is given.

Discover more at www.efehr.org.

References

Danciu L., Nandan S., Reyes C., Basili R., Weatherill G., Beauval C., Rovida A., Vilanova S., Sesetyan K., Bard P-Y., Cotton F., Wiemer S., Giardini D. (2021) The 2020 update of the European Seismic Hazard Model: Model Overview. EFEHR Technical Report 001, v1.0.0, https://doi.org/10.12686/a15

Crowley H., Dabbeek J., Despotaki V., Rodrigues D., Martins L., Silva V., Romão, X., Pereira N., Weatherill G. and Danciu L. (2021) European Seismic Risk Model (ESRM20), EFEHR Technical Report 002, V1.0.0, 84 pp, https://doi.org/10.7414/EUC-EFEHR-TR002-ESRM20

2022-03-08

Prospective evaluation of multiplicative hybrid earthquake forecasting models in California

Toño Bayona and Max Werner (University of Bristol), in collaboration with Bill Savran (Southern California Earthquake Center) and Dave Rhoades (GNS Science, New Zealand), prospectively evaluated the ability of sixteen multiplicative hybrid and six “single” seismicity models to forecast M>5 earthquakes in California over the past decade. This prospective evaluation tested models developed before 2011 against earthquakes that have occurred since then. Single models use past earthquake records, tectonic data, and interseismic strain rates, among other data, to formalise multiple hypotheses about seismogenesis, while multiplicative hybrids combine these models or geophysical datasets to potentially gain predictive skill.
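A multiplicative hybrid can be sketched as a baseline rate map multiplied, cell by cell, by one or more covariate maps raised to exponents, then rescaled to a chosen total rate. The form below is a simplified illustration: the function name, covariates, exponents, and normalisation choice are assumptions (in practice the exponents are fitted to data), and it is not the exact formulation evaluated by Bayona et al.

```python
def multiplicative_hybrid(baseline, covariates, exponents, total_expected=None):
    """Simplified multiplicative hybrid rate map (hypothetical illustration).

    baseline: rate per cell from a baseline seismicity model.
    covariates: list of covariate maps (positive values, one per cell), e.g. a
        strain-rate map.
    exponents: one exponent per covariate map (given here; normally fitted).
    total_expected: total expected number of events for the hybrid; if None,
        the baseline total is preserved.
    """
    raw = []
    for i, base in enumerate(baseline):
        value = base
        for cov_map, a in zip(covariates, exponents):
            # The exponent controls how strongly each covariate reshapes the map
            value *= cov_map[i] ** a
        raw.append(value)
    target = sum(baseline) if total_expected is None else total_expected
    scale = target / sum(raw)  # renormalise so the hybrid's total rate equals the target
    return [scale * v for v in raw]
```

With a baseline of `[1.0, 1.0, 2.0]`, a single covariate map `[1.0, 4.0, 1.0]`, and an exponent of 0.5, the hybrid shifts rate towards the second cell, giving `[0.8, 1.6, 1.6]` while keeping the total at 4 expected events.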

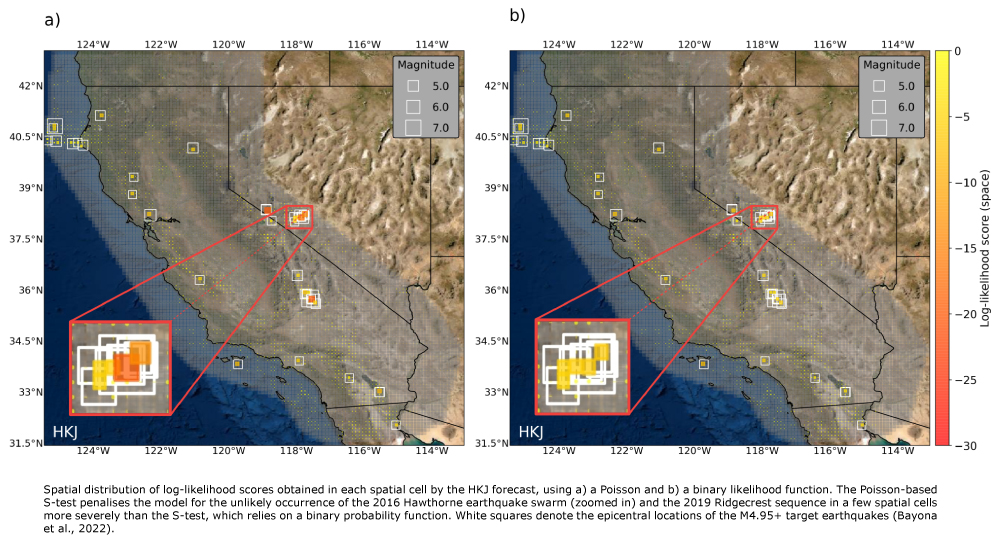

This evaluation, now published in Geophysical Journal International, involves a set of traditional and new tests implemented in the pyCSEP toolkit of the Collaboratory for the Study of Earthquake Predictability (CSEP), which rely on Poisson and binary likelihood functions. Until recently, CSEP tests were mainly based on a probability function that approximates earthquakes as independent and Poisson-distributed. However, the Poisson distribution is known to insufficiently capture the spatiotemporal variability of earthquakes, especially in the presence of clusters of seismicity. Therefore, this research introduces a new binary likelihood and associated tests to reduce the sensitivity of traditional CSEP evaluations to clustering.
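The difference between the two likelihoods can be seen in a small example. Under the Poisson likelihood, every observed event in a cell counts; under a binary likelihood, each cell only records whether at least one event occurred, with probability 1 - exp(-λ) for a Poisson rate λ. The sketch below is a minimal illustration assuming strictly positive forecast rates; the function names are hypothetical and this is not the pyCSEP implementation.

```python
import math


def poisson_log_likelihood(rates, counts):
    """Joint Poisson log-likelihood of observed counts per cell (rates must be > 0)."""
    return sum(-lam + n * math.log(lam) - math.lgamma(n + 1)
               for lam, n in zip(rates, counts))


def binary_log_likelihood(rates, counts):
    """Binary log-likelihood: each cell only records whether >= 1 event occurred."""
    total = 0.0
    for lam, n in zip(rates, counts):
        if n > 0:
            total += math.log(1.0 - math.exp(-lam))  # log P(at least one event)
        else:
            total += -lam                            # log P(no event) = -lam
    return total
```

A cluster of five events in one cell lowers the Poisson log-likelihood sharply compared with a single event, while the binary log-likelihood treats both cases identically, which is why binary tests are less sensitive to clustering.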

The consistency test results show that most forecasting models overestimate the number of earthquakes and struggle to explain the spatial distribution of the observed data. Furthermore, the comparative test results show that, contrary to retrospective analyses, none of the hybrid models are significantly more informative than a model (HKJ) that adaptively smooths the epicentral locations of small earthquakes, suggesting that small-magnitude seismicity is statistically useful for mapping the locations of future larger events in California.

This investigation raises the international standard for transparent, reproducible, and prospective CSEP earthquake forecasting experiments, as it includes a publicly available software reproducibility package to fully replicate its scientific results. This novel open science practice includes a data repository, freely accessible on Zenodo, containing the forecast files and the earthquake catalogue, as well as documented code openly available on GitHub. This case study has been shortlisted for the University of Bristol Open Research Prize 2021, an initiative to support open research across all disciplines at the university; the winners will be announced in the coming weeks.

More detailed information can be found in Bayona, J.A., Savran, W.H., Rhoades, D.A. and Werner, M.J., 2022. Prospective evaluation of multiplicative hybrid earthquake forecasting models in California. Geophysical Journal International, 229(3), 1736-1753.

2021-12-15

How well does Poissonian probabilistic seismic hazard assessment (PSHA) approximate the simulated hazard of epidemic‐type earthquake sequences?

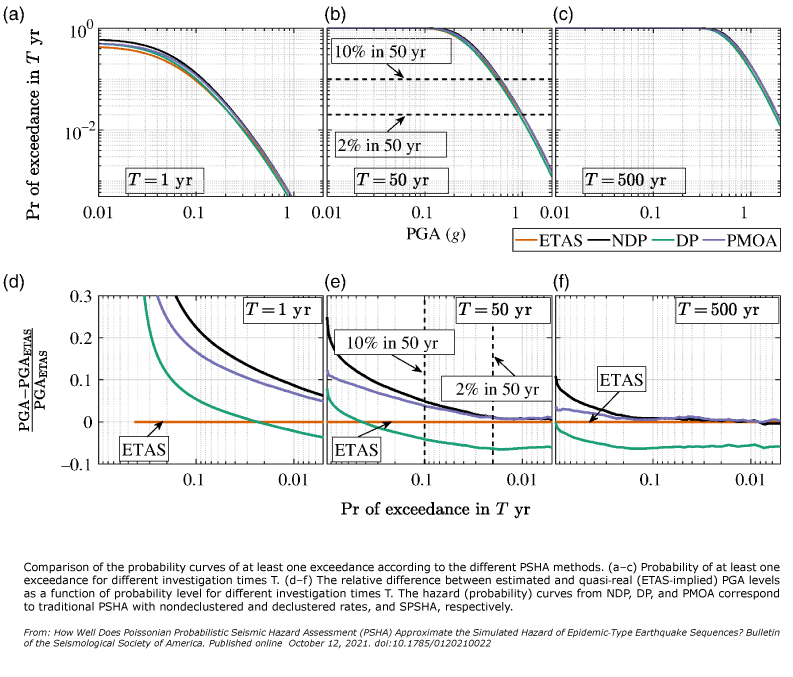

Shaoqing Wang and Max Werner from the University of Bristol published new research that challenges basic assumptions of standard seismic hazard assessment. Classical Poissonian probabilistic seismic hazard assessment (PSHA) relies on the rate of independent mainshocks to estimate future seismic hazard levels. Real earthquakes, however, cluster in time and space. This article shows that classical PSHA only poorly approximates hazard levels implied by models of more realistic clustered seismicity, such as the Epidemic Type Aftershock Sequences (ETAS) model.
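The Poisson assumption at the heart of classical PSHA can be illustrated with the standard conversion between an annual exceedance rate and a probability of exceedance over an exposure time. The sketch below uses the common textbook example of 10% in 50 years, which is not taken from the article. Under this model, exceedances are independent in time, which is precisely what clustered, ETAS-like seismicity violates.

```python
import math


def exceedance_probability(annual_rate, years):
    """Poisson probability of at least one exceedance within `years`."""
    return 1.0 - math.exp(-annual_rate * years)


def annual_rate_from_probability(probability, years):
    """Invert the Poisson relation: annual rate giving `probability` of exceedance in `years`."""
    return -math.log(1.0 - probability) / years


rate = annual_rate_from_probability(0.10, 50)
print(f"annual rate = {rate:.5f}, return period = {1 / rate:.0f} years")
# Round trip back to the probability of exceedance over 50 years
print(f"P(exceedance in 50 yr) = {exceedance_probability(rate, 50):.3f}")
```

This reproduces the familiar 475-year return period associated with 10% probability of exceedance in 50 years.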

The work also compared ETAS-implied hazard with Sequence-based PSHA (SPSHA) and found much better agreement, although none of the approximate methods could reproduce the multiple exceedance curves implied by the ETAS model. This suggests that cumulative seismic risk assessment with damage‐dependent fragility curves might need to consider ETAS‐like sequences.

Shaoqing Wang and Max Werner also investigated the relation between short‐term (conditional) and long‐term hazard and proposed a hazard analog of the Omori-Utsu and Utsu-Seki scaling laws as a function of initial magnitude, elapsed time, and long‐term hazard. This model can provide a quick prediction of ensemble‐averaged short‐term hazard and the time needed for the hazard levels to return to the long‐term average.
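The authors' hazard analog of the Omori-Utsu law is not reproduced here. As a rough illustration of the underlying idea only, the sketch below uses the classical Omori-Utsu aftershock rate, with an ETAS-style productivity that grows with mainshock magnitude, and solves for the time at which the aftershock rate falls back to a background rate. All parameter values are hypothetical, and the sketch works with seismicity rates rather than hazard levels.

```python
def omori_utsu_rate(t_days, k, c, p):
    """Aftershock rate (events/day) t_days after the mainshock: k / (t + c)^p."""
    return k / (t_days + c) ** p


def productivity(mainshock_mag, k0, alpha, m_ref):
    """ETAS-style productivity: larger mainshocks trigger more aftershocks."""
    return k0 * 10 ** (alpha * (mainshock_mag - m_ref))


def time_to_background(k, c, p, background_rate):
    """Solve k / (t + c)^p = background_rate for t (days)."""
    return (k / background_rate) ** (1.0 / p) - c


# Hypothetical parameters, for illustration only
C, P, K0, ALPHA, M_REF, MU = 0.01, 1.1, 0.05, 1.0, 3.0, 0.01

for mag in (5.0, 6.0, 7.0):
    k = productivity(mag, K0, ALPHA, M_REF)
    t_back = time_to_background(k, C, P, MU)
    print(f"M{mag}: rate returns to background after ~{t_back:.0f} days")
```

Even in this simplified form, the return time grows steeply with the initial magnitude, which is the kind of dependence the proposed scaling law captures for hazard.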

The authors conclude that realistic multigenerational earthquake clustering has both obvious and more subtle effects on long‐ and short‐term hazard and should be considered in refined hazard assessments.

How Well Does Poissonian Probabilistic Seismic Hazard Assessment (PSHA) Approximate the Simulated Hazard of Epidemic‐Type Earthquake Sequences? Shaoqing Wang; Maximilian J. Werner; Ruifang Yu. Bulletin of the Seismological Society of America (2021); https://doi.org/10.1785/0120210022

2021-10-08

A closer look #4: A solidarity-based earthquake early warning system

Can citizens play a role in real-time earthquake monitoring and thus contribute to developing earthquake early warning systems? This question was partially answered back in 2009 when the Quake-Catcher Network (QCN) initiative showed the usefulness of dense networks of low-cost sensors in detecting and measuring earthquakes. However, QCN was restricted to the most enthusiastic citizens willing to buy and install the sensor in their homes.

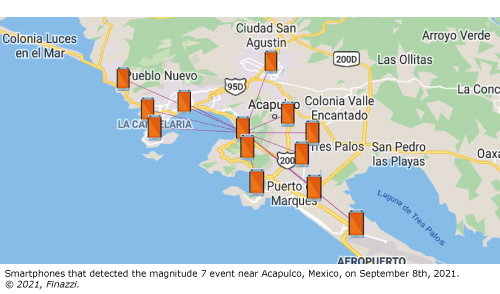

Things changed radically with the smartphone revolution. Smartphones have everything needed to implement a low-cost monitoring network, and joining the network takes only a few clicks. If you are a seismologist, you would argue that smartphones are not fixed to the ground (why would they be?) and mainly record noise. True. Nonetheless, many smartphones connected to a network can provide reliable real-time detections and rapid preliminary estimates of the earthquake parameters.

All of this was first achieved in 2013 by the Earthquake Network (EQN) initiative (www.sismo.app), which implements a public smartphone-based earthquake early warning system. Citizens join the project by installing the EQN app. Through the same app, they receive early warnings seconds before the shaking reaches their location. The app quickly became popular in Central and South America, where people experience earthquakes every other day. EQN also helped Nepalese citizens during the aftershocks of the April 2015 earthquake, which caused nearly 9,000 deaths. For a long time, EQN was regarded simply as a citizen science experiment; only recently did it catch the attention of seismologists and become a research project.

Today, scientists of the EU-funded RISE and TURNkey projects are giving “seismological meaning” to the detections EQN has recorded throughout its history. They found that the network is indeed capable of sending early warnings for damaging shaking levels. For instance, the recent magnitude 7 earthquake of 8 September 2021, with its epicentre near Acapulco, Mexico, was detected 5 seconds after origin time. The EQN app sent an early warning about 10 seconds before the strong shaking reached people in the nearest large city (Chilpancingo), and 55 seconds before it reached people in Mexico City.
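Why can many noisy phones still yield reliable detections? A minimal sketch, assuming (hypothetically) that each phone in an area triggers on noise independently with a small probability q within a short time window. The binomial tail then gives the chance that k or more phones trigger at once by accident, and requiring k simultaneous triggers keeps such false alarms rare. This is not the detection algorithm used by EQN; the phone count, q and tolerance below are illustrative assumptions.

```python
from math import comb


def false_alarm_probability(n_phones, q, k):
    """P(at least k of n_phones trigger by chance), each independently with probability q."""
    return sum(comb(n_phones, j) * q**j * (1 - q) ** (n_phones - j)
               for j in range(k, n_phones + 1))


def min_simultaneous_triggers(n_phones, q, max_false_alarm):
    """Smallest k whose chance false-alarm probability does not exceed max_false_alarm."""
    for k in range(1, n_phones + 1):
        if false_alarm_probability(n_phones, q, k) <= max_false_alarm:
            return k
    return None  # not achievable with this many phones


# Hypothetical: 200 phones in an area, 1% chance each of a noise trigger per window,
# and at most a one-in-a-million chance of a false detection per window
k = min_simultaneous_triggers(200, 0.01, 1e-6)
print(f"require at least {k} simultaneous triggers")
```

The more phones there are in an area, the more clearly a real earthquake, which triggers most of them at once, stands out from random noise triggers.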

Whether citizens are aware of it or not, EQN is based on a strong solidarity principle. Smartphones detecting the earthquake are usually very close to the epicentre, where an early warning is not possible. If your smartphone makes a detection, the early warning will be useful for someone living a little farther from the epicentre, but not for you. However, you can expect the favour to be returned. Thanks to this spirit of collaboration, EQN has grown continuously, and new users worldwide join the network every day. To date, the app counts more than 8 million downloads and 1.5 million active participants globally, and for many countries EQN is the only early warning system citizens can rely on.
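The solidarity principle follows from simple travel-time arithmetic. A rough sketch, assuming a constant S-wave speed of 3.5 km/s and ignoring hypocentral depth and message delivery delays (all simplifications are ours): the warning time at epicentral distance d is the S-wave travel time minus the time at which the alert is issued, so every site the S-wave has already passed when the alert goes out lies in a "blind zone" with no warning.

```python
S_WAVE_KM_PER_S = 3.5  # assumption: constant average crustal S-wave speed


def warning_time_s(distance_km, alert_time_s, vs=S_WAVE_KM_PER_S):
    """Seconds between the alert and the S-wave arrival (negative means no warning)."""
    return distance_km / vs - alert_time_s


def blind_zone_radius_km(alert_time_s, vs=S_WAVE_KM_PER_S):
    """Distance the S-wave has already travelled when the alert goes out."""
    return vs * alert_time_s


# Assumption: alert issued at detection time, 5 s after origin time
print(f"blind zone radius: {blind_zone_radius_km(5.0):.1f} km")
for d in (10, 50, 200):
    print(f"{d:>4} km: warning time {warning_time_s(d, 5.0):.1f} s")
```

The phones that detect the earthquake are inside the blind zone, while the warning time grows with distance, which is why the benefit goes to people farther away.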

While the number of smartphones in the network is increasing, new challenges need to be addressed. The EU-funded Horizon 2020 projects RISE and TURNkey are the perfect environment to study and implement new solutions, with EQN possibly interacting with classical monitoring networks to provide a more robust and faster early warning service across Europe and all over the world.

Read more about the EQN Project:

- Bossu, R., Finazzi, F., Steed, R., Fallou, L., Bondár, I. (2021) "'Shaking in 5 Seconds!' Performance and User Appreciation Assessment of the Earthquake Network Smartphone‐Based Public Earthquake Early Warning System". Seismological Research Letters; https://doi.org/10.1785/0220210180

- Finazzi F. (2020) The Earthquake Network Project: A Platform for Earthquake Early Warning, Rapid Impact Assessment, and Search and Rescue. Front. Earth Sci. 8:243. doi: 10.3389/feart.2020.00243

2021-07-09

What happens when artificial seismicity is mixed with natural seismicity?

Every day, in addition to tectonic events, seismic networks detect several non-natural quakes; among them, quarry and mine blasts are the most numerous recorded anthropogenic events. Such events are often not identified and are thus catalogued together with tectonic events. Unfortunately, most statistical seismologists ignore or underestimate the presence of explosions and quarry blasts in seismic catalogues.

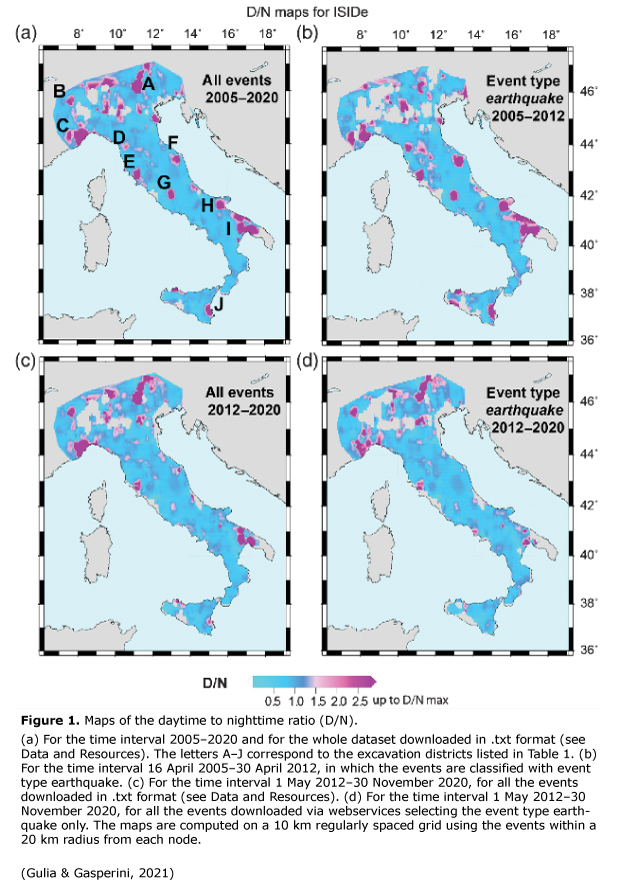

In their latest research article, RISE members Laura Gulia and Paolo Gasperini use the example of the Italian Seismological Instrumental and Parametric Database (ISIDe) to show what happens when artificial seismicity is mixed with natural seismicity.

Because of their low magnitudes, artificial events inflate the number of small earthquakes in a catalogue, contaminating natural signals and seismicity datasets and distorting the proportion of micro-seismicity relative to larger magnitudes. Blasts misclassified as natural earthquakes may thus artificially alter seismicity rates and, in turn, the b-value of the Gutenberg-Richter relationship, an essential ingredient of several forecasting models.
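The effect of blasts on the b-value can be reproduced with the standard Aki (1965) maximum-likelihood estimator, b = log10(e) / (mean(M) - Mc), for continuous magnitudes at or above the completeness magnitude Mc. The sketch below builds a synthetic Gutenberg-Richter catalogue with b = 1 and then adds a hypothetical batch of small "blasts" just above Mc. Neither the data nor this setup comes from the article.

```python
import math


def aki_b_value(magnitudes, mc):
    """Aki (1965) maximum-likelihood b-value for continuous magnitudes >= mc."""
    above = [m for m in magnitudes if m >= mc]
    mean_excess = sum(above) / len(above) - mc
    return math.log10(math.e) / mean_excess


def synthetic_gr_catalogue(n, b, mc):
    """Deterministic Gutenberg-Richter magnitudes via the inverse CDF at evenly spaced quantiles."""
    return [mc - math.log10(1.0 - (i + 0.5) / n) / b for i in range(n)]


MC = 1.0
natural = synthetic_gr_catalogue(1000, b=1.0, mc=MC)
# Hypothetical misclassified blasts: 300 small events between Mc and Mc + 0.2
blasts = [MC + 0.1 * (i % 3) for i in range(300)]

print(f"b (natural only):     {aki_b_value(natural, MC):.2f}")
print(f"b (natural + blasts): {aki_b_value(natural + blasts, MC):.2f}")
```

The extra small events pull the mean magnitude down, so the estimated b-value rises from about 1.0 to about 1.2, even though the natural seismicity is unchanged.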

To identify quarry blasts, the authors mapped the ratio of daytime to nighttime events (D/N), introduced by Wiemer and Baer in 2000. Because quarry blasts are carried out during the day, non-natural events increase this ratio; an indicative threshold for an anomalous D/N is about 1.5. As shown in the figure, Gulia and Gasperini map this ratio for Italy, contrasting the whole dataset (natural and non-natural events) with a selection restricted to events classified as earthquakes. Their analysis reveals numerous quarry blasts in ISIDe from 16 April 2005 to 30 April 2012 that were misclassified as earthquakes. After 1 May 2012, event-type classification generally improves; however, many quarry blasts are still not correctly classified.
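A minimal sketch of the day-to-night ratio, assuming local event times are available as fractional hours and that "day" means 06:00 to 18:00. The day window is a hypothetical choice; Wiemer and Baer's exact definition of day and night hours may differ. Counts are normalised per hour so that unequal day and night windows remain comparable, and for a purely natural catalogue the ratio should be close to 1.

```python
import math


def day_night_ratio(local_hours, day_start=6.0, day_end=18.0):
    """Hourly event rate during the day divided by the hourly rate during the night."""
    day = sum(1 for h in local_hours if day_start <= h < day_end)
    night = len(local_hours) - day
    if night == 0:
        return math.inf
    day_length = day_end - day_start
    night_length = 24.0 - day_length
    return (day / day_length) / (night / night_length)


# Toy catalogue: natural events spread evenly over the 24 hours
natural = [h + 0.5 for h in range(24)] * 10
# Hypothetical quarry blasts clustered around midday
blasts = [10.0, 11.0, 12.0, 13.0, 14.0] * 30

for label, hours in [("natural", natural), ("natural + blasts", natural + blasts)]:
    ratio = day_night_ratio(hours)
    flag = "anomalous" if ratio >= 1.5 else "ok"  # 1.5: indicative threshold from the text
    print(f"{label}: D/N = {ratio:.2f} ({flag})")
```

Mapping this ratio on a spatial grid, as the authors do, highlights the areas where daytime events are over-represented.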

The two researchers conclude that using a contaminated dataset reduces the statistical significance of the results and can lead to erroneous conclusions. Removing such non-natural events should therefore be the first step for a data analyst.

The research article "Contamination of Frequency–Magnitude Slope (b‐Value) by Quarry Blasts: An Example for Italy" is published here: https://doi.org/10.1785/0220210080.

Stefan Wiemer, Manfred Baer; Mapping and Removing Quarry Blast Events from Seismicity Catalogs. Bulletin of the Seismological Society of America 2000; 90 (2): 525–530. doi: https://doi.org/10.1785/0119990104

2021-04-30

Embracing Data Incompleteness for Better Earthquake Forecasting

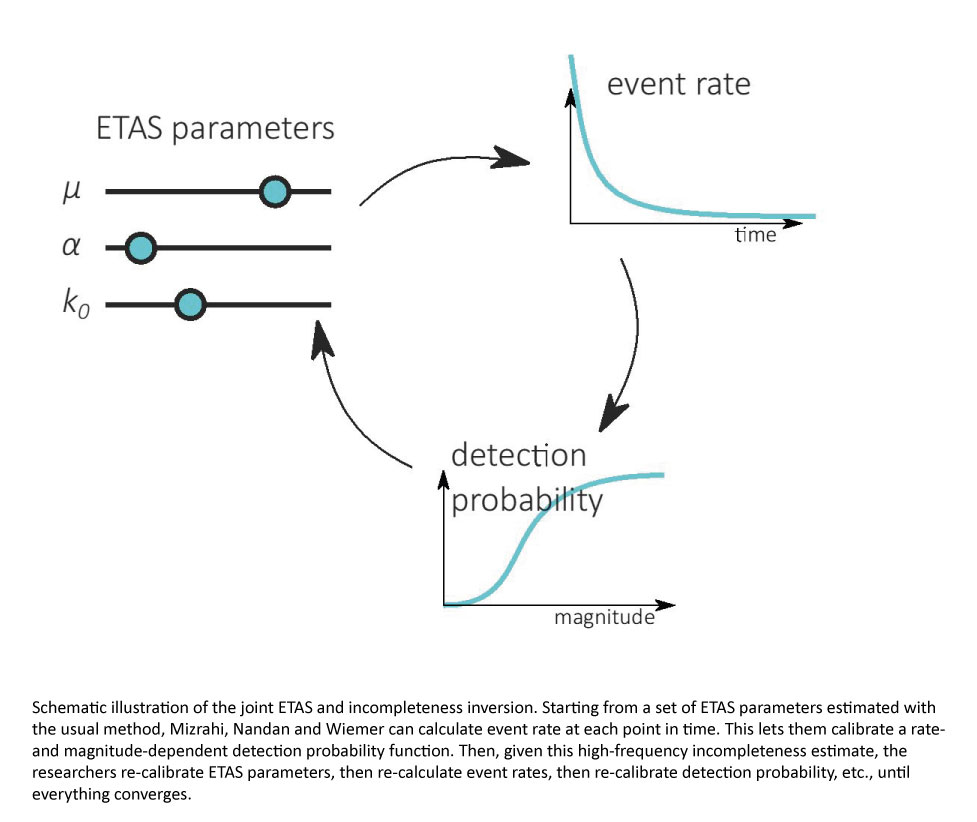

The magnitude of completeness (Mc) of an earthquake catalogue, above which all earthquakes are assumed to be detected, is of crucial importance for any statistical analysis of seismicity. Mc has been found to vary in space and time. In the longer term, it depends on the configuration of the seismic network, but it also depends on temporarily increased seismicity rates, in the form of short-term aftershock incompleteness. Most seismicity studies assume a constant Mc for the entire catalogue, which forces a compromise between deliberately misestimating Mc and excluding large amounts of valuable data.
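For readers unfamiliar with how Mc is estimated in practice, one common and simple approach is the maximum-curvature method, which takes Mc as the most populated magnitude bin of the non-cumulative frequency-magnitude distribution. This is a generic illustration, not the method of Mizrahi, Nandan and Wiemer. The toy magnitudes are hypothetical, and in practice a positive correction is often added because this method tends to underestimate Mc.

```python
from collections import Counter


def mc_max_curvature(magnitudes, bin_width=0.1):
    """Mc as the centre of the most populated magnitude bin (maximum-curvature method)."""
    counts = Counter(round(m / bin_width) for m in magnitudes)
    most_populated_bin, _ = counts.most_common(1)[0]
    return most_populated_bin * bin_width


# Toy catalogue: few events below ~1.5 (missed detections), Gutenberg-Richter decay above
magnitudes = ([1.2] * 5 + [1.3] * 12 + [1.4] * 30 + [1.5] * 80 + [1.6] * 63
              + [1.7] * 50 + [1.8] * 40 + [1.9] * 32 + [2.0] * 25)
print(f"Mc (max. curvature) = {mc_max_curvature(magnitudes):.1f}")
```

A single Mc like this is exactly the constant-completeness simplification that a time-varying Mc(t) avoids.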

Epidemic-Type Aftershock Sequence (ETAS) models have been shown to be among the most successful earthquake forecasting models, both for short- and long-term hazard assessment. To be able to leverage historical data with high Mc as well as modern data, which is complete at low magnitudes, Leila Mizrahi, Shyam Nandan and Stefan Wiemer developed a method to calibrate the ETAS model when time-varying completeness magnitude Mc(t) is given. This extended calibration technique is particularly beneficial for long-term Probabilistic Seismic Hazard Assessment (PSHA), which is often based on a mixture of instrumental and historical catalogues.
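For orientation, the ETAS model describes the earthquake rate at time t as a background rate plus contributions from every past event. Each contribution decays with an Omori-Utsu kernel and is scaled by a productivity term that grows exponentially with magnitude. The sketch below is a purely temporal version with hypothetical parameters. The space-time formulation and the Mc(t)-aware calibration of Mizrahi, Nandan and Wiemer are not reproduced. In this sketch, m_ref is the lowest magnitude allowed to trigger, so lowering it lets smaller earthquakes contribute.

```python
from dataclasses import dataclass


@dataclass
class Event:
    time: float       # days since the start of the catalogue
    magnitude: float


def etas_intensity(t, history, mu, k0, alpha, c, p, m_ref):
    """Temporal ETAS rate (events/day) at time t.

    Only past events with magnitude >= m_ref contribute; each adds an
    Omori-Utsu term scaled by k0 * 10^(alpha * (M - m_ref)).
    """
    rate = mu
    for ev in history:
        if ev.time < t and ev.magnitude >= m_ref:
            productivity = k0 * 10 ** (alpha * (ev.magnitude - m_ref))
            rate += productivity / (t - ev.time + c) ** p
    return rate


# Hypothetical parameters and a toy event history
params = dict(mu=0.1, k0=0.02, alpha=1.0, c=0.01, p=1.1, m_ref=2.5)
history = [Event(0.0, 3.0), Event(2.0, 5.5), Event(2.5, 2.8)]

for t in (1.0, 2.1, 3.0, 30.0):
    print(f"t = {t:5.1f} d: rate = {etas_intensity(t, history, **params):.3f} events/day")
```

The rate jumps right after the magnitude 5.5 event and then decays back towards the background rate mu.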

In addition, the researchers designed a self-consistent algorithm that jointly estimates ETAS parameters and high-frequency detection incompleteness, to address potential biases in parameter calibration due to short-term aftershock incompleteness. For this, they generalized the concept of Mc and considered a rate- and magnitude-dependent detection probability, embracing incompleteness instead of avoiding it.

To explore how the newly gained information from the second method affects earthquake predictability, Mizrahi, Nandan and Wiemer conducted pseudo-prospective forecasting experiments for California. Two features of their model are distinguished: small earthquakes are allowed and assumed to trigger aftershocks, and ETAS parameters are estimated differently. The researchers compared the forecasting performance of a model with both features, and of two additional models each having only one of the features, with that of the current state-of-the-art base model.

Preliminary results suggest that their proposed model significantly outperforms the base ETAS model. They also find that the ability to include small earthquakes in the simulation of future scenarios is the main driver of the improvement. This positive effect vanishes as the magnitude difference between newly included events and forecast target events becomes large. Mizrahi, Nandan and Wiemer think that a possible explanation lies in the findings of previous studies, which indicate that earthquakes tend to preferentially trigger similarly sized aftershocks. Thus, besides being able to better forecast relatively small events of magnitude 3.1 or above, the researchers gained a useful insight that can guide them in developing the next, even better, earthquake forecasting models.

The paper "The Effect of Declustering on the Size Distribution of Mainshocks" is published here: https://doi.org/10.1785/0220200231

This work has received funding from the European Union’s Horizon 2020 research and innovation program under Grant Agreement Number 821115, real‐time earthquake risk reduction for a resilient Europe (RISE).

2021-03-31

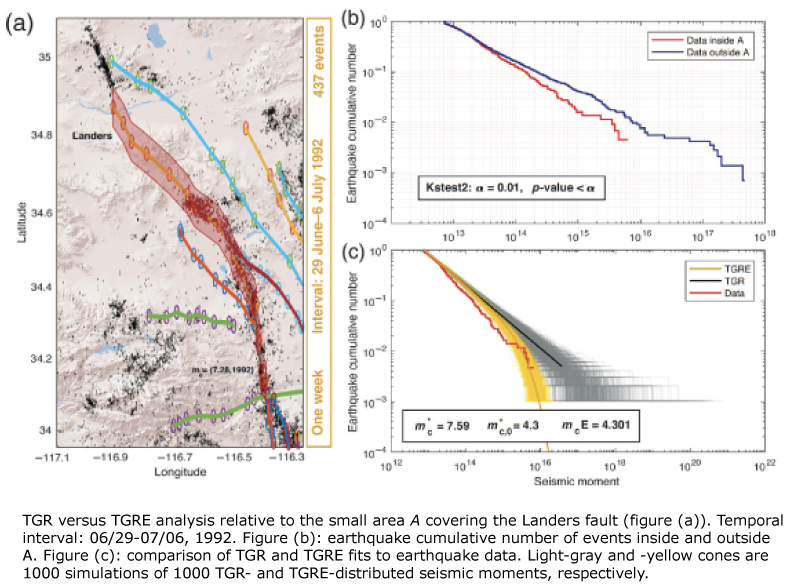

An Energy‐Dependent Earthquake Moment–Frequency Distribution

The probability that a strong shock nucleates inside a small area A that has just experienced another strong event should be lower than the probability predicted by the tapered Gutenberg-Richter (TGR) model. This is because a large amount of elastic energy has already been released in the first strong shock, and it takes time to recover to the previous state. The TGR model, however, does not take this into account. To overcome this limitation, RISE members Ilaria Spassiani and Warner Marzocchi propose the energy-varying TGR (TGRE) model, in which the corner seismic moment is a space-time function depending on the amount of elastic energy E currently available in the small area A. More precisely, their energy-varying corner seismic moment Mc(E) increases with the square of the time elapsed since the last resetting event, which is assumed to have reset the elastic energy in area A to a minimum value.

In practice, the taper of TGRE shifts abruptly to the left just after a strong shock and then slowly recovers to its long-term value as the elastic energy reloads. Spassiani and Marzocchi require TGRE to satisfy an invariance condition: over large domains, where a single strong event cannot significantly affect the total available energy, TGRE reduces to TGR. A sensitivity analysis also shows that the dependence of Mc(E) on its parameters is not substantial in the short term, indicating that their specific choice, as long as it is reasonable, cannot substantially affect the results of any analysis involving TGRE.
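The tapered Gutenberg-Richter survival function (Kagan's form) gives the probability that an earthquake above a threshold moment M_t exceeds moment M: S(M) = (M_t/M)^β · exp((M_t - M)/M_c), where M_c is the corner moment. The sketch below evaluates it for a long-term corner and for a reduced corner, mimicking how TGRE shifts the taper to the left right after a strong shock. The energy-dependent law Mc(E) itself is not reproduced. Both corner values are hypothetical, and moment is converted from Mw with the standard relation M0 = 10^(1.5 Mw + 9.1) N·m.

```python
import math


def moment_from_mw(mw):
    """Seismic moment in N·m from moment magnitude: M0 = 10^(1.5 Mw + 9.1)."""
    return 10 ** (1.5 * mw + 9.1)


def tgr_survival(m0, m0_threshold, beta, m0_corner):
    """Tapered Gutenberg-Richter: P(moment >= m0 | moment >= m0_threshold)."""
    if m0 <= m0_threshold:
        return 1.0
    return (m0_threshold / m0) ** beta * math.exp((m0_threshold - m0) / m0_corner)


BETA = 2.0 / 3.0                 # corresponds to b = 1 (beta = b / 1.5)
m_t = moment_from_mw(4.0)        # threshold moment
target = moment_from_mw(6.5)     # probability of an Mw >= 6.5 event

# Hypothetical corners: long-term Mw 8.0 versus a depleted post-shock Mw 6.0
long_term = tgr_survival(target, m_t, BETA, moment_from_mw(8.0))
depleted = tgr_survival(target, m_t, BETA, moment_from_mw(6.0))

print(f"P(Mw >= 6.5 | Mw >= 4), long-term corner: {long_term:.2e}")
print(f"P(Mw >= 6.5 | Mw >= 4), reduced corner:   {depleted:.2e}")
```

Lowering the corner leaves the small-magnitude part of the distribution almost untouched but cuts the probability of a large event by orders of magnitude, which is the behaviour TGRE introduces near a freshly ruptured fault.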

To evaluate the reliability and applicability of the TGRE model, the two RISE members applied it to the Landers sequence (USA), which started with the Mw7.3 mainshock of 28 June 1992. First, they found that the seismic moment-frequency distribution (MFD) close to the fault system affected by the mainshock differs statistically significantly from that of off-fault earthquakes, showing a lower corner magnitude. This result is entirely independent of modeling, and it underlines the need for an energy-varying MFD at short spatiotemporal scales. Spassiani and Marzocchi then showed that TGRE may explain the difference in the MFDs for the Landers sequence well, and that the results are stable under possible variations of its parameters. Notably, they obtained positive evidence in favour of the TGRE fit, with respect to TGR, for Landers data within one week, one month, six months and one year of the Mw7.3 mainshock (Fig. 1 shows the results for one week). In most cases, the evidence is “substantial” or “strong” (terminology by Kass & Raftery, 1995).

The results suggest that TGRE can be profitably used in operational earthquake forecasting, as the model is simple and rooted in clearly stated assumptions. It does require some more or less explicit subjective choices. However, Spassiani and Marzocchi think these are less subjective than ignoring the empirical evidence that strong triggered earthquakes do not nucleate on a fault just ruptured by another strong event. Rather than focusing on the details of the model, which is obviously not the only one possible, the RISE members mainly aim to convey the message that an energy dependency should be included in the MFD: Spassiani and Marzocchi argue that self-organized criticality at large spatiotemporal scales changes into intermittent criticality in small space-time domains that have recently experienced a significant release of energy.

This study was recently published in BSSA:

https://doi.org/10.1785/012020190.

As future work, Spassiani and Marzocchi aim to evaluate the reliability of TGRE and to compare it with alternative models through prospective tests.

This study was partially supported by the Real‐time Earthquake Risk Reduction for a Resilient Europe (RISE) project and funded by the European Union’s Horizon 2020 research and innovation program (Grant Agreement Number 821115).

2021-02-02

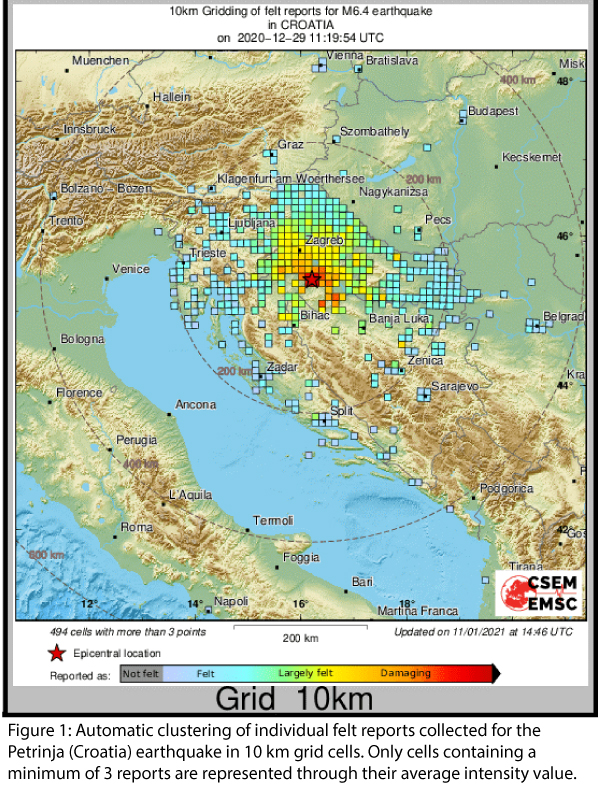

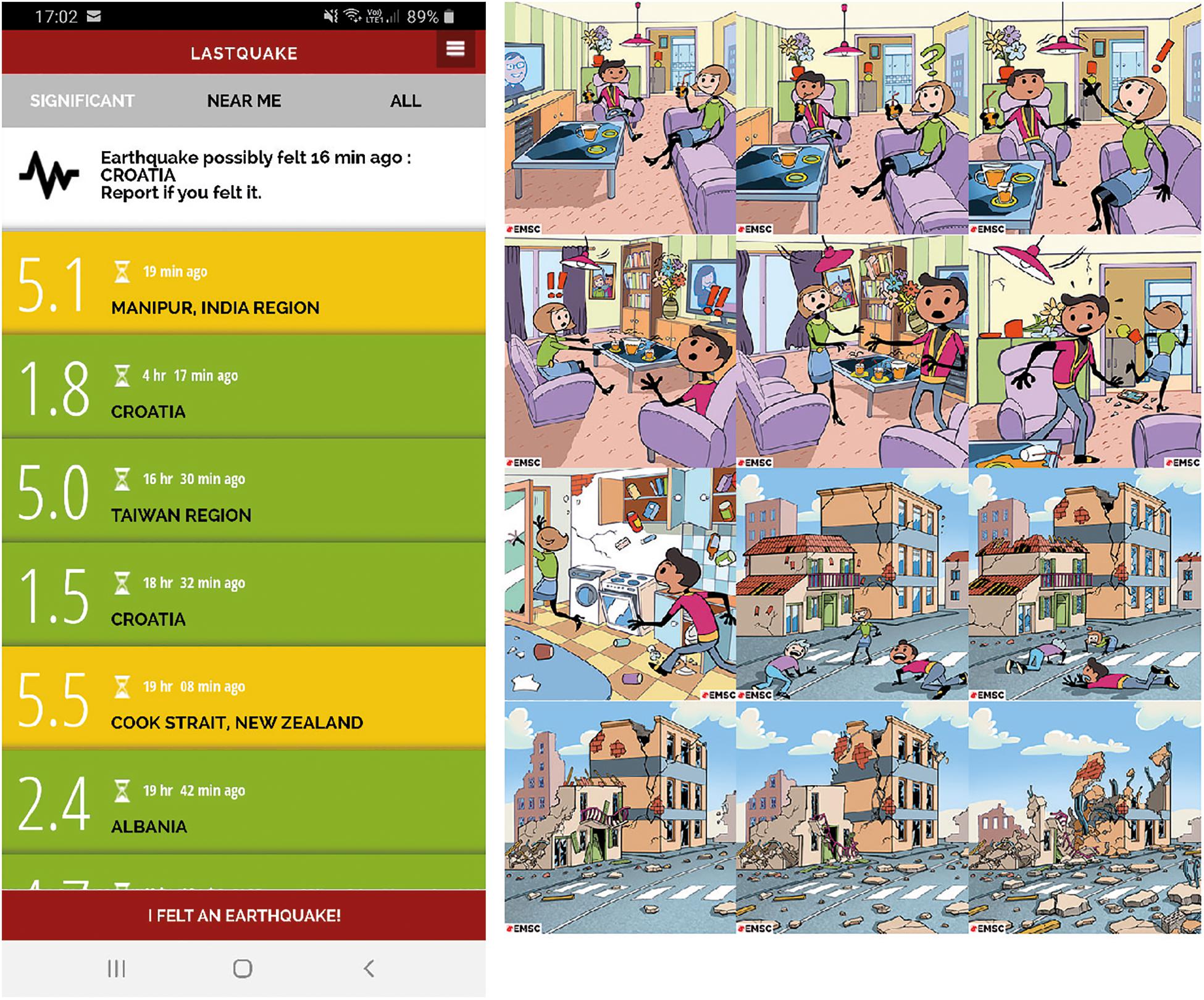

Dynamic seismic risk communication and rapid situation assessment in face of a destructive earthquake in Europe

On December 29th, 2020, a strong earthquake with a magnitude of 6.4 shook the city of Petrinja, 45 km south of the capital Zagreb. Eight people lost their lives. In March 2020, nine months before the Petrinja event, a magnitude 5.3 earthquake had damaged the capital Zagreb to a similar extent. Because of the Zagreb earthquake and its aftershocks, the LastQuake app developed at the Euro-Mediterranean Seismological Centre (EMSC) had reached a high penetration rate of 7% in the country by the time of the Petrinja earthquake. In addition, a few weeks before the Petrinja earthquake, EMSC had released a new website for mobile devices to optimise the crowdsourcing of felt reports. These specific circumstances made the Petrinja earthquake an ideal test case for the dynamic risk communication and rapid situation assessment tools developed by EMSC within the RISE and TURNkey projects.

Both projects aim at reducing future human and economic losses caused by earthquakes in Europe. TURNkey (Towards more Earthquake-resilient Urban Societies through a Multi-sensor-based Information System enabling Earthquake Forecasting, Early Warning and Rapid Response actions) is a project funded under the same H2020 call as RISE.

The Petrinja mainshock was detected within 66 seconds through a surge in EMSC website traffic, and 11 seconds later through concomitant LastQuake app openings. These crowdsourced detections initiated a so-called “Crowdseeded seismic Location” (CsLoc), a method that exploits the geographical information of crowdsourced detections to select the seismic stations likely to have recorded the earthquake. The method also uses the timing information to identify observed arrival times likely associated with this earthquake, enabling a fast and reliable seismic location. The automatic CsLoc solution was published 98 seconds after the earthquake and lay 8 km from the location manually reviewed afterwards. EMSC received more than 2,000 felt reports in the first 10 minutes, out of a total of more than 15,000, before its servers started to experience significant delays due to the high traffic. These initial felt reports were sufficient to estimate the epicentral intensity at VIII (for a final epicentral intensity of IX), i.e. damaging levels. They also confirmed the early alert from the automatic impact assessment tools "EQIA" (Earthquake Qualitative Impact Assessment). About 140 geo-located pictures showing the effects of the earthquake were crowdsourced. EMSC shared all rapid situation assessment information with the ARISTOTLE (All Risk Integrated System Towards Trans-boundary holistic Early-warning) group, which is in charge of preparing an impact assessment for the European Civil Protection Unit within 3 hours of the event.
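The station and pick selection at the heart of CsLoc can be pictured as a spatial and temporal filter. A minimal sketch, assuming a single crowdsourced detection point and time, a hypothetical 150 km search radius and a hypothetical 60 s look-back window (none of these values are EMSC's). Arrival picks are kept only if they come from stations near the detection and arrived shortly before it, since people react after the shaking starts. The actual CsLoc method and its subsequent location step are not reproduced.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass
class Pick:
    station: str
    lat: float
    lon: float
    time_s: float  # arrival time, seconds since an arbitrary reference


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def select_picks(picks, det_lat, det_lon, det_time_s, radius_km=150.0, lookback_s=60.0):
    """Keep picks from stations near the crowdsourced detection that arrived shortly before it."""
    return [
        pk for pk in picks
        if haversine_km(det_lat, det_lon, pk.lat, pk.lon) <= radius_km
        and det_time_s - lookback_s <= pk.time_s <= det_time_s
    ]


# Toy picks from hypothetical stations; detection at (45.4 N, 16.3 E) at t = 70 s
picks = [
    Pick("NEAR1", 45.5, 16.1, 20.0),  # ~20 km away, inside the window: kept
    Pick("NEAR2", 45.8, 16.0, 5.0),   # ~50 km away, but too early for the window: dropped
    Pick("FAR1", 48.2, 16.4, 40.0),   # ~310 km away: dropped
]
print([pk.station for pk in select_picks(picks, 45.4, 16.3, 70.0)])
```

The selected picks would then feed a standard location algorithm; filtering first is what allows a location to be computed quickly without waiting for a full network solution.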

The rupture orientation and position determined a posteriori with the FinDer software (developed at ETH Zurich) from felt reports collected within the first 25 minutes are in good agreement with the fault orientation expected from the tectonic setting and with the aftershock distribution. This approach will be tested further under operational conditions in the coming months.

The large volume of collected felt reports accelerated the cooperation with the United States Geological Survey (USGS) to integrate them into USGS global ShakeMaps and to define common approaches for geographically clustering individual reports.

Beyond these scientific results, a significant effort was devoted to complementing the automatic information published on Twitter (@LastQuake) by answering questions and explaining the reasons for the service disruptions observed after several of the main aftershocks. All communication was in English. We retweeted many of the tweets from the Croatian seismological institute and referred to them as much as possible. The questions followed the same evolution over time already observed after other damaging earthquakes: first, people were interested in the expected impact, then in the possible evolution of the seismicity, then in earthquake prediction (with the usual confusion between forecasting and early warning), and finally in whether human activities (in this case hydrocarbon exploitation) could have caused the tremors. During this period, which lasted about two weeks, there were also many questions about seismology, such as the meaning of magnitude and intensity, or the reasons for magnitude discrepancies between institutes.

It is difficult to evaluate the impact of this direct public communication effort quantitatively. Because it was conducted in English, it reached only part of the population. However, several elements suggest that Twitter users appreciated our effort. Our tweets were viewed 9 million times on the day of the mainshock, and 4.8 million times per day on average over the first seven days. This again demonstrated the strong public interest in receiving information after a damaging earthquake and during an aftershock sequence. Tweets explaining that earthquake prediction does not exist were liked 700 times. Many users reported that getting rapid information and direct answers to their questions was key to reducing their anxiety. This public interest led to tens of interviews in national and regional media. Perhaps more meaningful in terms of public appreciation, EMSC collected more than €2,000 in individual donations and received many offers of technical support for our service disruptions from both IT specialists and companies.

This experience also underlined the many improvements still needed, from strengthening our IT infrastructure to better prioritising information during an aftershock sequence. More generally, the great interest shown by the public in Croatia in receiving information after the Petrinja event demonstrates a strong need for dynamic risk communication. It also shows that crowdsourcing can significantly improve the capacity for rapid impact assessment.

Authors: Rémy Bossu, Istvan Bondár, Maren Böse, Jean-Marc Cheny, Marina Corradini, Laure Fallou, Sylvain Julien-Laferrière, Frédéric Massin, Matthieu Landès, Julien Roch, Frédéric Roussel and Robert Steed

2020-12-17

What is the Foreshock Traffic Light System?

Recently, Laura Gulia investigated the spatio-temporal evolution of the earthquake size distribution throughout a seismic sequence, focusing on the b-value. This parameter describes the relative number of small and large earthquakes, i.e. the relationship between earthquake magnitude and the number of earthquakes. She and her colleagues found that, immediately after a mainshock, the b-value increases by 20%-30% and remains high for at least the following 5 years, reducing the chance of a larger earthquake occurring near the fault that generated the mainshock.

Based on their research, Laura Gulia and Stefan Wiemer developed the Foreshock Traffic Light System (FTLS), a promising tool for assessing mainshock and aftershock hazard. In an interview with Gabriele Amato from the NH blog, Laura Gulia explains more about the ideas behind it and her future research plans. Read the full interview here.
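The decision logic of a traffic light based on b-value changes can be sketched in a few lines. This sketch assumes, for illustration, that the b-value after a large event is compared with a reference background b-value and that a relative drop or rise of 10% or more switches the light. The published FTLS also involves careful choices of completeness magnitude, sample sizes and uncertainties, none of which are reproduced here.

```python
def ftls_light(b_after, b_reference, threshold=0.10):
    """Classify the relative b-value change after a large event.

    red:    b dropped by >= threshold (the event may be a foreshock)
    green:  b rose by >= threshold (typical aftershock behaviour)
    yellow: change too small to discriminate
    """
    change = (b_after - b_reference) / b_reference
    if change <= -threshold:
        return "red"
    if change >= threshold:
        return "green"
    return "yellow"


# A 25% increase, within the 20%-30% range reported after mainshocks
print(ftls_light(1.25, 1.0))  # green
print(ftls_light(0.85, 1.0))  # red
print(ftls_light(1.05, 1.0))  # yellow
```

The yellow band keeps the system from issuing a confident signal when the b-value change is within what sampling noise alone could produce.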

Gulia, L., and S. Wiemer (2019). Real-time discrimination of earthquake foreshocks and aftershocks, Nature 574, 193–199. https://doi.org/10.1038/s41586-019-1606-4

2020-10-13

International Day for Disaster Risk Reduction #DRRday

The United Nations General Assembly has designated October 13th as the International Day for Disaster Risk Reduction to promote a global culture of disaster risk reduction. Disasters have a profound impact on people's lives and wellbeing. Among natural hazards such as thunderstorms, landslides, and droughts, earthquakes are the deadliest. However, much of the damage and many of the losses can be avoided through effective risk reduction strategies.

In March of this year, an earthquake with a magnitude of 5.4 occurred near Zagreb (Croatia). Bricks fell from roofs, facades cracked, walls collapsed, debris damaged parked cars, and many people were injured. Earthquakes still cannot be predicted, but there are measures and approaches for minimising their consequences. Developing tools and measures to reduce future human and economic losses is the aim of RISE, which stands for "Real-time earthquake rIsk reduction for a reSilient Europe". RISE studies seismic risk, its changes, importance, and evolution at all stages of the risk management process. The project assesses current potential and limits and advances the state of the art to reduce seismic risk in Europe and beyond.

One year into the project, first results are becoming apparent. RISE contributes in many ways to knowledge that will help reduce future earthquake-related losses. For instance, RISE has successfully deployed a prototype array as a demonstrator in Bern (Switzerland) and designed an impulse generator, which is currently being tested in multi-storey buildings. In addition, the researchers focused on developing new approaches to model seismicity and on extending existing ones. Some models have already been demonstrated, and the project has made notable progress in earthquake forecasting. It has also focused on physics-based modelling of seismicity, an evolving field. Furthermore, static and time-invariant exposure models for 45 countries and time-invariant vulnerability models representing over 500 buildings have been developed. To ensure the best possible use of all available information for the benefit of society, RISE scientists tested different start-page designs and hazard announcements representing the diversity of elements used in multi-hazard platforms, and conducted workshops to understand which features of multi-hazard warning apps non-experts prefer.

RISE thus adopts an integrative, holistic view of risk reduction that targets the different stages of risk management. Improved technological capabilities are applied to combine and link all relevant information, in order to enhance scientific understanding, inform societies and consequently foster resilience in Europe and beyond.

More information: https://www.undrr.org/

2020-08-21

4 new publications in "The Power of Citizen Seismology: Science and Social Impacts"

Several RISE papers (some of them co-funded by the TURNkey project) dealing with crowdsourced data and public earthquake communication have recently been published. Four papers are part of the special issue of the open-access online Frontiers journal “The Power of Citizen Seismology: Science and Social Impacts” edited by Rémy Bossu, Kate Huihsuan Chen and Wen-Tzong Liang.

These four RISE papers published in the Frontiers journal cover interactions with the public, how the seismological community can benefit from such interactions for rapid situational awareness, and a method to speed up the location of felt earthquakes. You can find all papers here:

- Citizen Seismology Without Seismologists? Lessons Learned From Mayotte Leading to Improved Collaboration

- Rapid Public Information and Situational Awareness After the November 26, 2019, Albania Earthquake: Lessons Learned From the LastQuake System

- Accurate Locations of Felt Earthquakes Using Crowdsource Detections

- The Earthquake Network Project: A Platform for Earthquake Early Warning, Rapid Impact Assessment, and Search and Rescue

Are you interested in more RISE publications? Here you will find the list with all our publications.

*The image for this news item is adapted from: Bossu et al. (2020), "Rapid Public Information and Situational Awareness After the November 26, 2019, Albania Earthquake: Lessons Learned From the LastQuake System"

2020-07-08

A closer look #2: What is Operational Earthquake Forecasting?

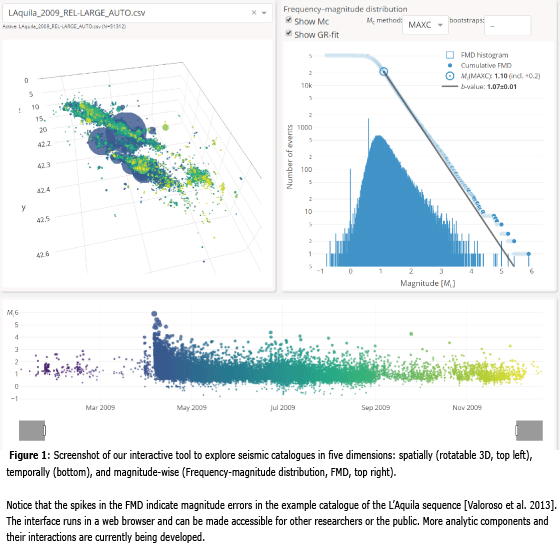

One important focus of RISE is to advance earthquake predictability research such as Operational Earthquake Forecasting. This research can benefit from the constantly evolving observational capabilities of seismic monitoring, which, for instance, yield an ever-increasing number of recorded earthquakes, especially at smaller magnitudes. Such capabilities need to be exploited to gain more insight into the earthquake occurrence process and, therefore, to improve earthquake forecasting.

As a first step, we explore existing high-resolution earthquake catalogues that contain events with magnitudes down to ML 0 or below. We have started to develop an interactive tool that will facilitate a more intuitive analysis of seismicity in five dimensions (see Figure).

In particular, we will focus on these aspects:

- Spatio-temporal variability in the frequency-magnitude distribution: e.g., statistical analyses of event sizes could tell us more about the state of a fault system.

- Earthquake clustering properties: e.g., well-located hypocenters could reveal how earthquake sequences progress and how earthquakes are triggered.

- Foreshock analysis: e.g., earthquakes prior to a larger earthquake might share a common spatial-temporal pattern. In addition, high-resolution catalogues could potentially reveal many more sequences that have foreshocks than is currently believed.

- Limits of the current quality of earthquake catalogues: e.g., what information are we missing?

We will adopt state-of-the-art methods (e.g., from machine learning) to augment these analyses, for instance to perform parameter selection and to search for signals and patterns that are indicative of the earthquake occurrence process.

Our findings will improve our understanding of the earthquake occurrence process. The knowledge gained could allow us to develop innovative earthquake forecasting models, which can be stochastic, physics-based and/or hybrid. Ultimately, our advances will contribute to better mitigating seismic risk, which will be analysed within another work package of RISE.

2020-06-18

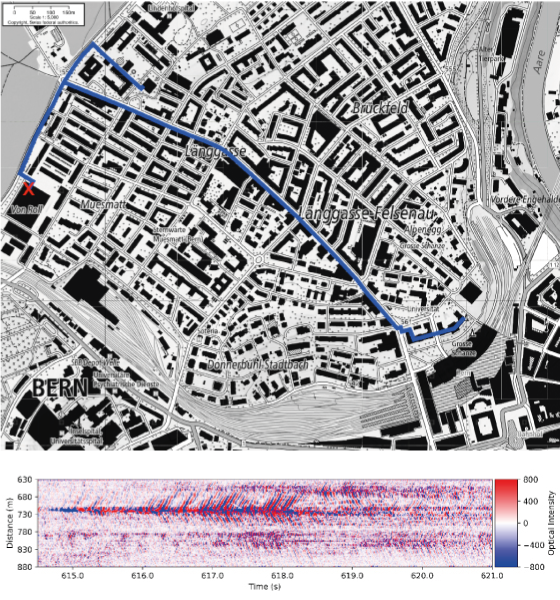

A closer look #1: Towards optical sensing of ground motion for improved seismic hazard assessment

Optical fibres are the backbone of our modern communication network. Short pulses of laser light transmit enormous amounts of data, but on their journey from sender to receiver, they also gather information about the optical fibre itself. In fact, microscopic displacements of the fibre slightly distort the laser pulses, an effect that has recently become detectable with highly sensitive interferometers.

This emerging technology, known as Distributed Acoustic Sensing (DAS), allows us to measure ground motion excited by a large variety of sources, such as earthquakes or landslides. Harnessing existing networks of telecommunication fibres, DAS, therefore, offers the opportunity to assess and potentially mitigate natural hazards in densely populated urban areas.

To explore this opportunity, RISE researchers at ETH Zurich are conducting a pilot experiment in the Swiss capital Bern, in close collaboration with the telecommunication company SWITCH. Several connected telecommunication fibres traverse the city in different directions and, along a 6 km path, measure ground motion every two metres in real time, nearly 1000 times per second. Most of the observed ground motion is caused by traffic, industrial installations, and construction sites.

Though the amplitude of these signals is, fortunately, much lower than the ground motion caused by destructive earthquakes, this wealth of data can be used to infer rock properties of the upper tens to hundreds of metres of the subsurface. Knowing these properties is essential to predict the ground motion caused by potential future earthquakes.

Research on DAS in urban environments is in its infancy, within the RISE project and worldwide. Initial results are very promising, especially in terms of the quality and unprecedented spatial resolution of the data. Yet, substantial research and development are still needed to process the enormous amounts of DAS data efficiently.
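To give a sense of scale for the amounts of DAS data mentioned above, the figures from the Bern pilot (a path of about 6 km, one measurement every two metres, roughly 1000 samples per second) can be turned into a rough data-rate estimate. This is only a back-of-envelope sketch: the storage size of a single sample (one 32-bit value) is our assumption, not a figure from the experiment, and actual raw DAS formats may differ.

```python
# Back-of-envelope estimate of the Bern DAS data rate (illustrative only).
# Path length, channel spacing and sampling rate follow the text;
# BYTES_PER_SAMPLE (one 32-bit value) is an ASSUMPTION, not a project figure.

FIBRE_LENGTH_M = 6_000      # ~6 km measurement path
CHANNEL_SPACING_M = 2       # one measurement point every two metres
SAMPLING_RATE_HZ = 1_000    # "nearly 1000 times per second", rounded up
BYTES_PER_SAMPLE = 4        # assumption: one float32 per sample


def das_data_rate(length_m, spacing_m, rate_hz, bytes_per_sample):
    """Return (channels, samples per second, bytes per day)."""
    channels = length_m // spacing_m          # number of measurement points
    samples_per_s = channels * rate_hz        # all channels sampled in parallel
    bytes_per_day = samples_per_s * bytes_per_sample * 86_400  # seconds per day
    return channels, samples_per_s, bytes_per_day


channels, samples_per_s, bytes_per_day = das_data_rate(
    FIBRE_LENGTH_M, CHANNEL_SPACING_M, SAMPLING_RATE_HZ, BYTES_PER_SAMPLE
)
print(f"{channels} channels, {samples_per_s:,} samples/s, "
      f"~{bytes_per_day / 1e12:.2f} TB/day")
# prints: 3000 channels, 3,000,000 samples/s, ~1.04 TB/day
```

Under these assumptions, a single 6 km fibre already produces on the order of a terabyte of raw data per day, which illustrates why efficient processing is a research topic in its own right.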

2020-03-17

Detecting earthquake-triggered landslides via Twitter

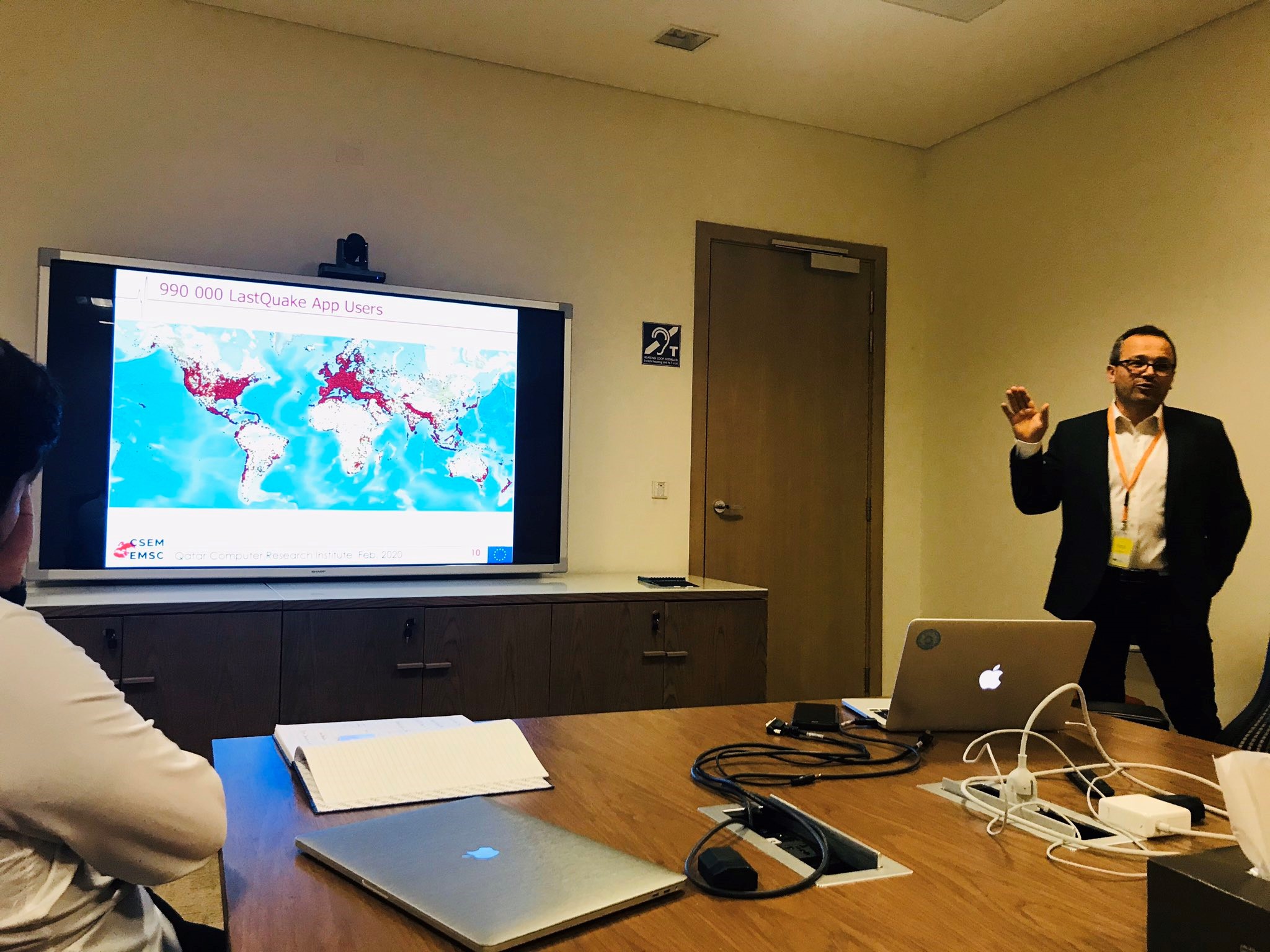

Landslides can significantly hamper earthquake response because they may block roads. However, within the first few hours after an earthquake anywhere in the world, very little information is available about where, when and how it has triggered landslides. Therefore, the European-Mediterranean Seismological Centre (EMSC) started a project in collaboration with the Qatar Computing Research Institute (QCRI) to evaluate whether such landslides can be detected by monitoring tweets on Twitter in real time.

Although EMSC already detects earthquakes in this way, detecting landslides presents a different challenge, as very few tweets are published on this topic. To tackle this issue, EMSC is working together with QCRI, which has developed AIDR (Artificial Intelligence Disaster Response), a platform that harvests tweets from Twitter and uses artificial intelligence (AI) to analyse their pictures. The first training dataset, a database of landslide pictures, was generously provided by the British Geological Survey. Further AI training is currently ongoing. In addition, we manually check all detected tweets daily and hope to have an operational prototype in the coming months.

2019-09-04

Promising Kick-Off Meeting

The RISE kick-off meeting took place from 2 to 4 September 2019 in Zurich, Switzerland. Over 60 participants joined to discuss the project's first steps. Besides many interesting presentations held by project members and external experts, a poster fair and breakout sessions provided plenty of room to be creative and to take everyone's expertise and ideas into account. During the conference dinner on a boat on Lake Zurich, the participants had the opportunity to network and hold discussions in a different setting. Now the RISE community is ready to start its work to make Europe more resilient.

2019-09-01

RISE kick-off meeting in Zurich

From 2 to 4 September 2019, the RISE kick-off meeting will be held in Zurich. Over 60 representatives of the scientific community are expected to attend. The event will feature several poster sessions, breakout sessions, and a networking event to support collaboration within the project.